Table Of Contents:

A.) Introduction

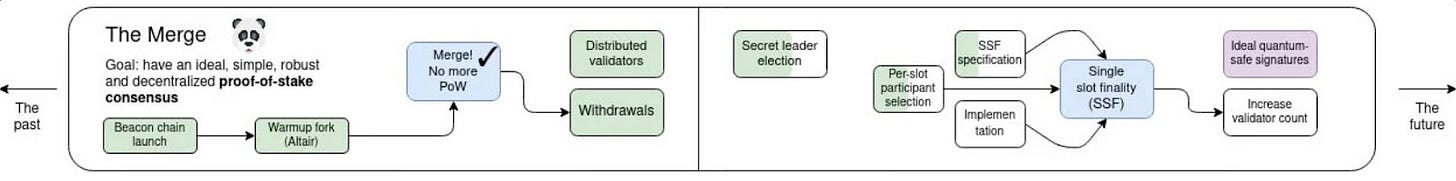

B.) Roadmap Overview

Section 1: The Merge

Beacon Chain Launch

Warmup Fork (Altair)

The Merge

Withdrawals

Distributed Validators (DVT)

Single Slot Finality (SSF)

Secret Leader Election (SLE)

Other Fork-Choice Improvements

View-Merge

Quantum-Safe Signatures

Increase Validator Count

Section 2: The Surge

EIP-4844 Specification

Handful of zk-EVMs

Optimistic Rollup Fraud Provers (Whitelisted Only)

EIP-4844 (Basic Rollup Scaling)

Danksharding (Full Rollup Scaling)

Optimistic Rollup Fraud Provers

Quantum-Safe and Trusted-Setup-Free Commitments

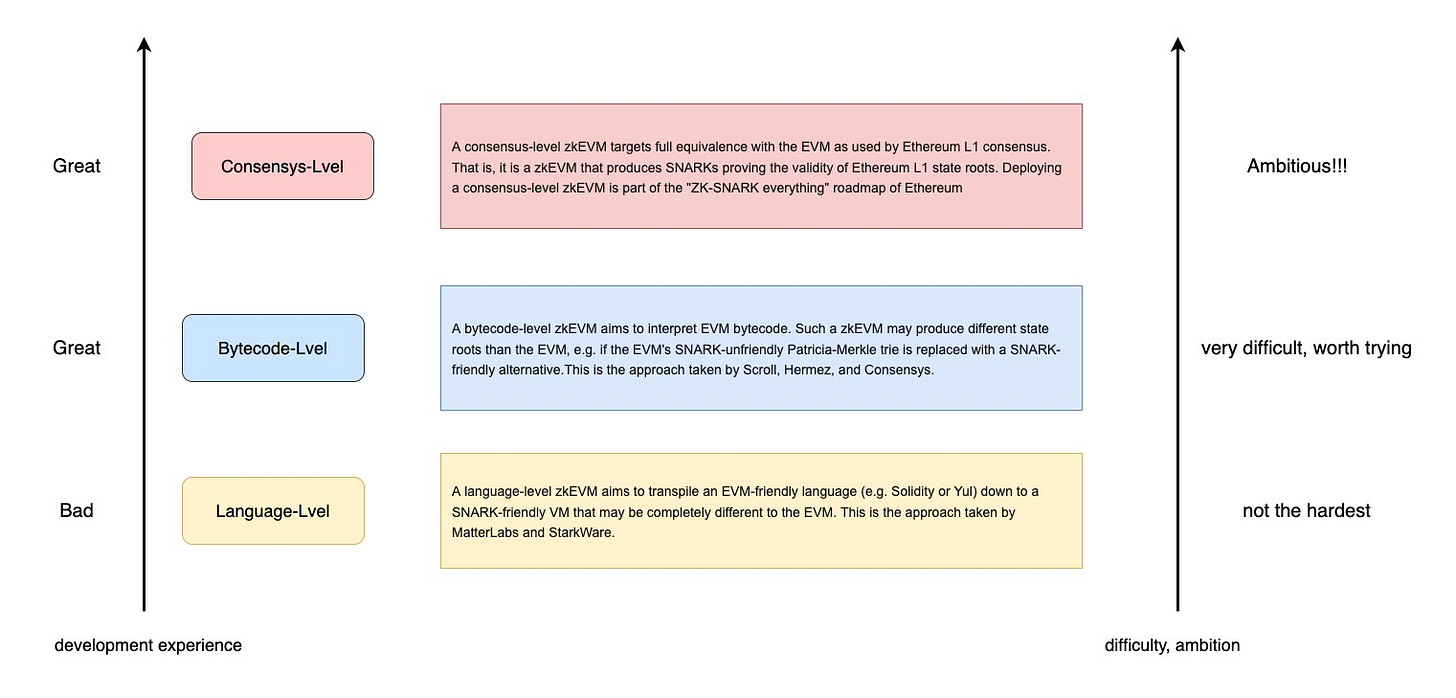

zk-EVMs

Improve Cross Rollup Standards + Interop

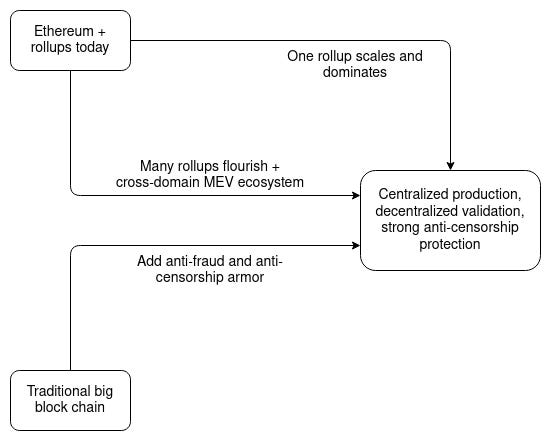

Section 3: The Scourge

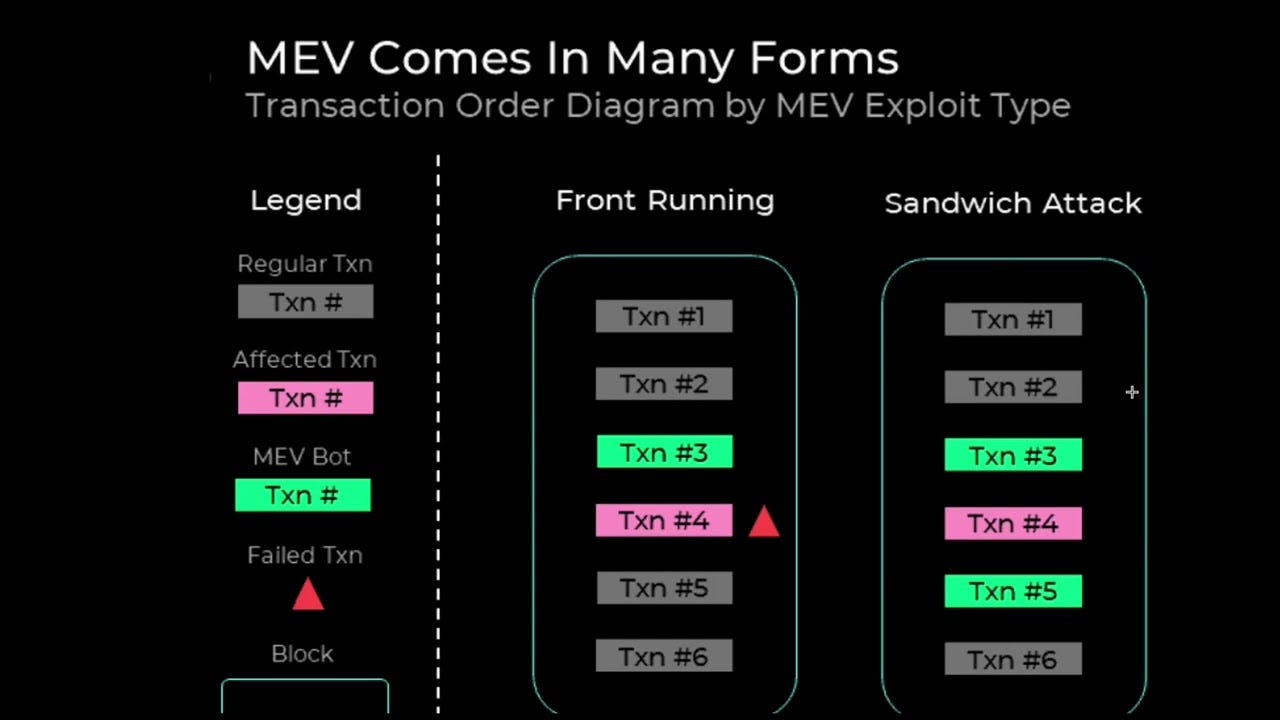

Extra-protocol MEV markets (MEV-Boost)

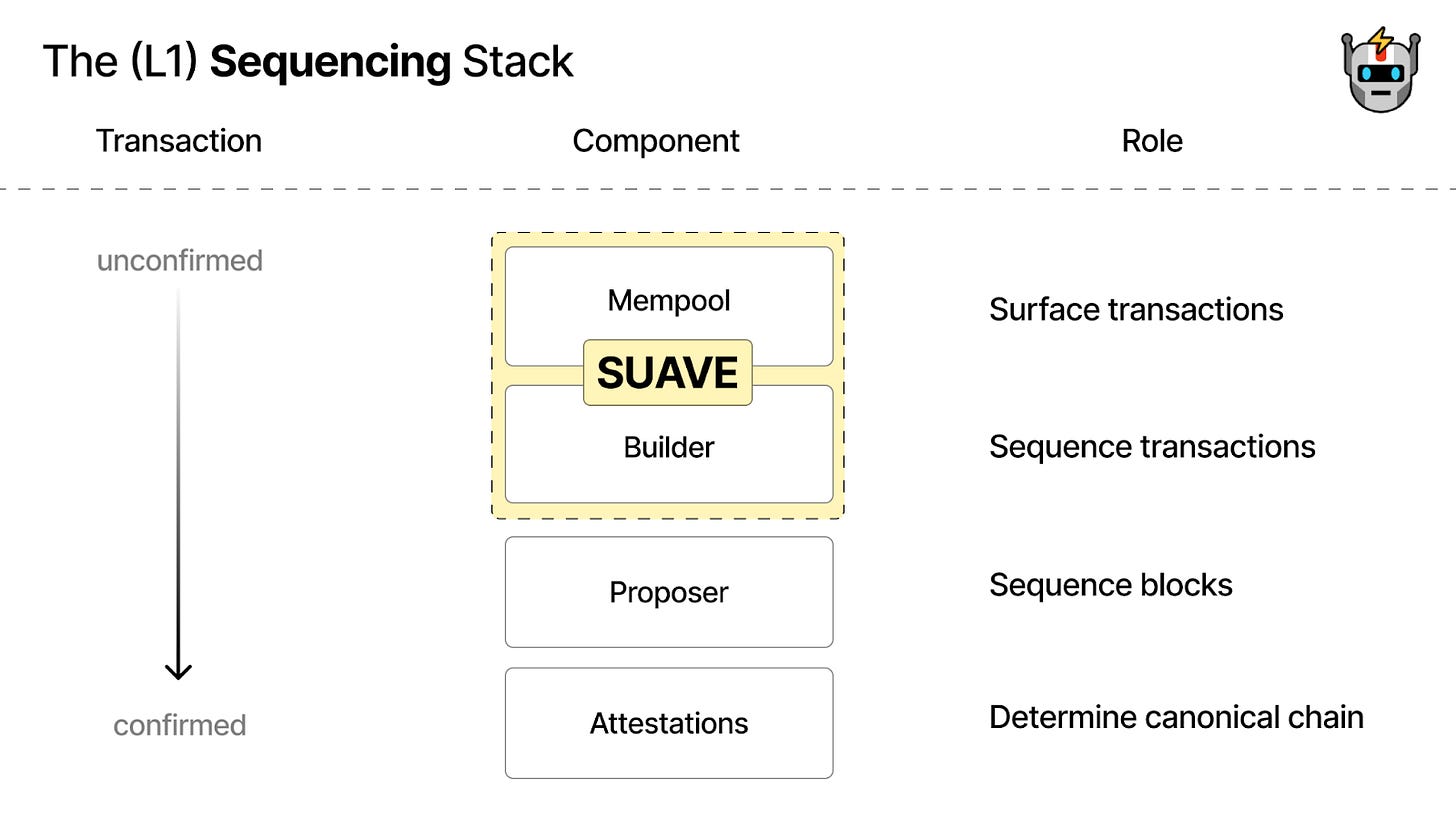

MEV Track (Endgame Block Production Pipeline):

In-protocol PBS (ePBS)

Inclusion Lists

MEV burn

Execution Tickets

Distributed Block Building

MEV-track Miscellaneous:

Tx Pre-confirmations

Application Layer MEV Minimization

Staking Economics/Experience Track:

Raise Max Effective Balance

Improve Node Operator Usability

Explore Total Stake Capping

Explore Solutions to Liquid Staking Centralization

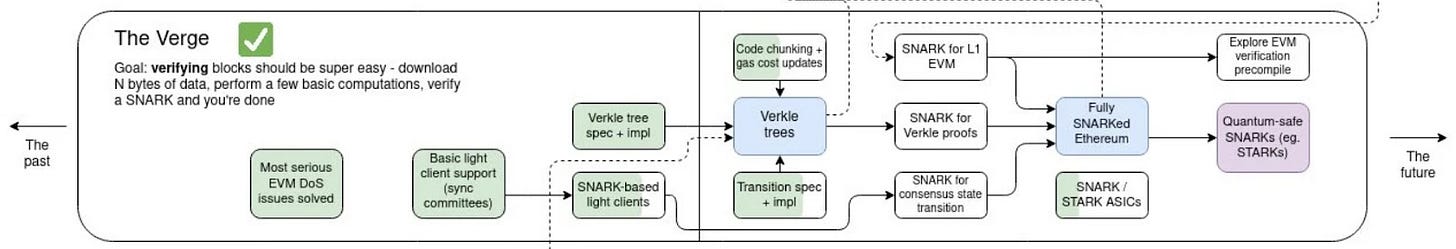

Section 4: The Verge

Most Serious EVM DoS Issues Solved

Basic Light Client Support (Sync Committees)

Verkle Tree Specification

Gas Cost Changes

Verkle Trees

Fully SNARKed Ethereum

Move to Quantum-Safe SNARKs (e.g. STARKs)

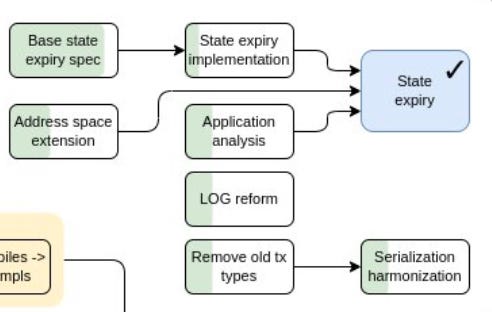

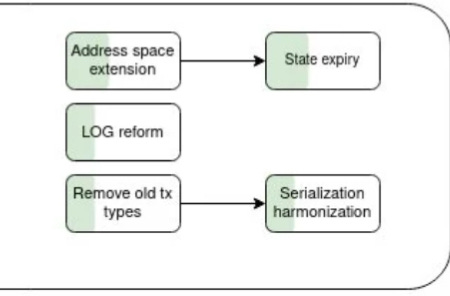

Section 5: The Purge

Eliminate Most Gas Refunds

Beacon Chain Fast Sync

EIP-4444 Specification

History Expiry (EIP-4444)

State Expiry

Statelessness

Section 6: The Splurge

EIP-1559

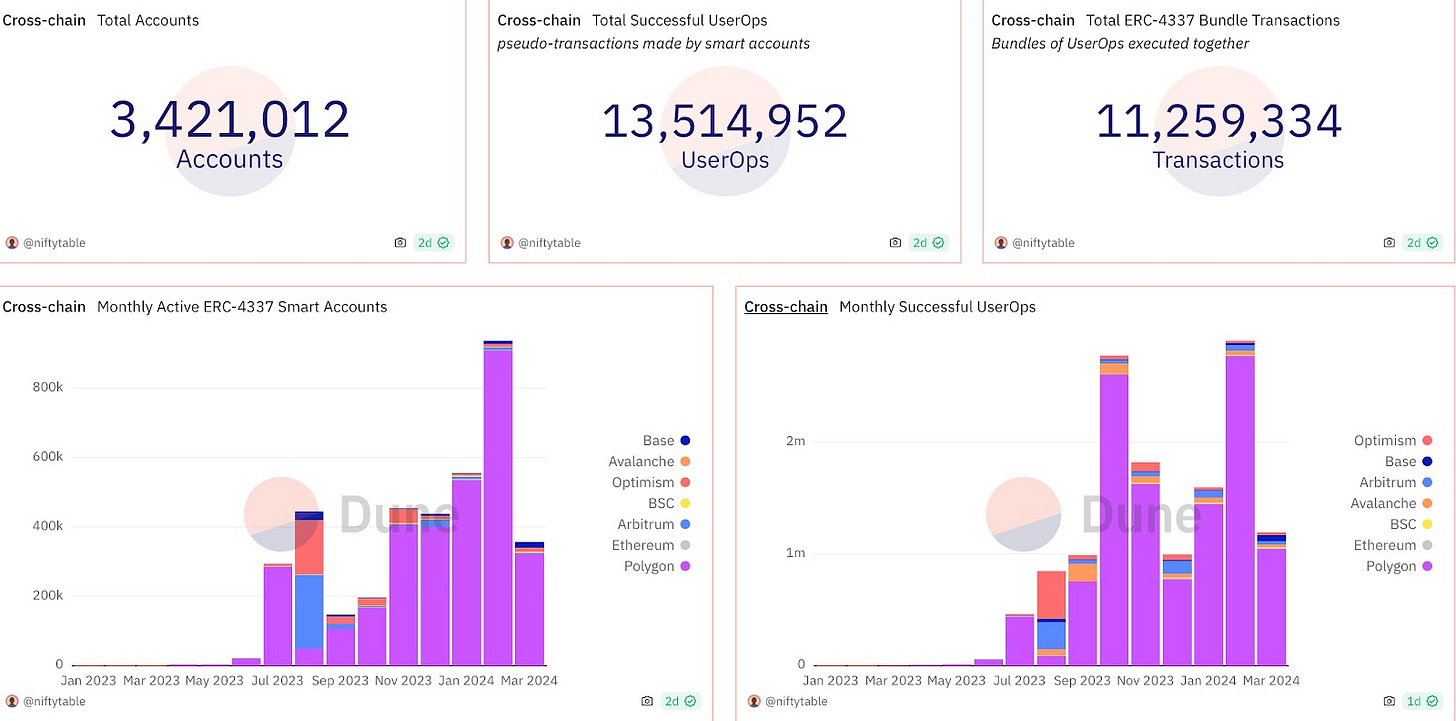

ERC-4337 Specification

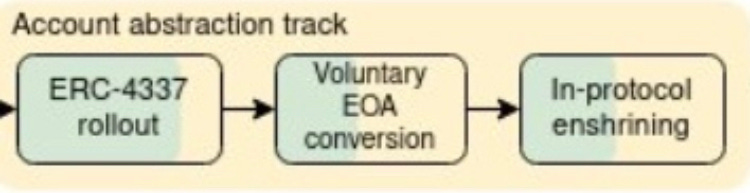

Account Abstraction

EIP-1559 Endgame

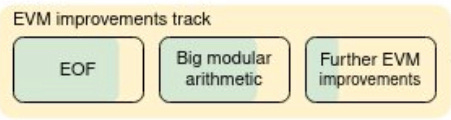

EVM Endgame

Explore Deep Cryptography

Explore Delay Encrypted Mempools

VDFs

Explore Solutions for Dust Accounts

Bonus

Prague/Electra Hardfork

Rollup Centric Roadmap (2024 Version)

Economics of ETH

Acronym Glossary

Introduction

Before we talk about Ethereum, I just want to say thanks - to all of you. The developers, the researchers, the reply guys (me), the educators, the gigabrains, the founders, et cetera et cetera. None of this would have been possible without all of you.

This work stands on the shoulders of giants. I didn’t come up with any of the EIPs, or the scaling plan, or any of it. What I did do was take smarter people's ideas and input, pool them together, and (attempt to) summarize them.

For me, it’s fun to nerd out on things like this, and if you're reading this, I’m guessing you feel similar. Ethereum is an endless black hole of things to learn about, and so it’s really easy to nerd out. It’s a never-ending game of learning, touching so many different sectors, like cryptography, finance, programming, game theory, economics, law, etc. On top of that, the community is filled with so many cool, smart, down-to-earth people, and it is something I’m proud to be a part of.

I have tried to reference all of the topics discussed with links to the original posts/research, so if I missed any, please let me know. I hope you all enjoy it, and thanks again to everyone who helped.

Getting Back on Track: Ethereum

Ethereum was conceived in 2013 by Vitalik Buterin and later developed throughout 2014 by him and other co-founders (Gavin Wood, Charles Hoskinson, Anthony Di Iorio, Joseph Lubin to name a few). Ethereum officially went live on July 30th, 2015.

Ethereum is a decentralized and open source distributed computing platform that allows for the creation of smart contracts, which in turn allows for the creation of decentralized applications. Ethereum has the ability to process general-purpose code, which in more crypto-native terms means it can process smart contracts. Smart contracts facilitate agreements from contract -> contract and/or from contract -> user, which means that different parties (contracts & users) can enforce and verify agreements between each other. This was (and is) a huge unlock for the crypto industry.

Smart contracts operate on an if-then logic, meaning that if X event occurs, then Y will follow. Unlike real world contracts, smart contracts are auto executing and programmed onto a blockchain, in this case Ethereum. Ethereum made it so applications and developers no longer had to be siloed into the limitations of certain blockchains, like Bitcoin for example, and allowed them to build on something more generalizable that can facilitate agreements via smart contracts.

With the ability to natively support smart contracts, Ethereum has ushered in hundreds of applications to come and build on top of the shared platform, and compose with other applications doing the same. The composability that Ethereum unlocks can be analogized to lego: smart contracts are like lego pieces that can mix and match with other lego pieces, and this composability is what made things like DeFi (money legos) possible.

Roadmap Overview

Vitalik and the community have released plans to improve Ethereum over the next 5-10 years and I am going to break them down. I'll start by showing you the former roadmap to serve as a reference point, and then I’ll go over the current roadmap.

The former roadmap, presented by Vitalik in 2022:

And the current roadmap:

This report is going to break down each section and each subsection of the current roadmap.

Thanks to Mattison Asher for comments.

Enjoy! Thanks for reading :)

The Merge: Full Transition to Proof of Stake

Goal: Have an ideal, simple, robust and decentralized Proof-of-Stake consensus.

Status: More than halfway complete!! I’d say around 60%.

Ethereum’s transition to Proof of Stake occurred on September 15th, 2022, with withdrawals shipping 7 months later, in April 2023. What’s left to do in this section is to implement SSLE (single secret leader election) and SSF (single-slot finality), further develop DVT, ship view merge and other fork-choice improvements, move to quantum-safe signatures, and continue to increase the validator count.

What’s Been Implemented?

Beacon Chain Launch: The Beacon Chain launched on December 1st, 2020. It was the first step in Ethereum's transition to PoS, aside from all of the initial research & development that went into PoS.

Warmup Fork (Altair): Altair was another important step in the transition to PoS. It served as a test for the Beacon Chain & its validators, ensuring they were ready for the big changes that came with the merge (like merging Ethereum mainnet with the Beacon Chain).

The Merge: The Merge went live on September 15th, 2022, and transitioned Ethereum from a Proof of Work consensus mechanism to a Proof of Stake one.

Withdrawals: Withdrawals were enabled on April 12th, 2023 as part of the Shanghai/Capella upgrade. Enabling withdrawal functionality finally allowed staked ETH to leave the consensus layer (the Beacon Chain) and go onto the execution layer to be transacted with.

What’s Left To Implement?

Distributed Validators

Single Slot Finality (SSF)

Secret Leader Election (SLE)

Other Fork-Choice Improvements

View Merge

Quantum-Safe Signatures

Increase Validator Count

The Merge

The Merge is finally complete!! Ethereum has successfully moved from Proof of Work (PoW) to Proof of Stake (PoS), with the transition going extremely smoothly. Huge congratulations to the development team and the Ethereum community. The Merge is just an incredible technical feat, being one of the most complex upgrades to any cryptocurrency network ever, and was easily the most important development of 2022.

Let's dive a little deeper into Proof of Stake, and on why Ethereum decided to make the change.

What is Proof of Stake?

Proof of Stake (PoS) is a consensus mechanism used by blockchains, similar to Proof of Work (PoW).

A PoW system has participants called “miners” who use hardware and electricity to solve complex mathematical puzzles to validate and propose blocks. This works differently from a PoS system, where participants called “validators” purchase the native token of a protocol and put it up as “stake,” allowing them to then validate transactions and propose blocks.

Staking just means to lock up a certain amount of cryptocurrency as collateral to a blockchain, subjecting you to rewards and (potential) penalties. To become a validator on Ethereum, you buy the native token of the network, ETH, and put it up as stake on the blockchain, Ethereum. How much ETH you have will determine whether you can solo stake (32 ETH minimum), or use a third party service (no minimums).

When a validator on Ethereum behaves correctly, it is rewarded by the network in three ways: protocol emissions, MEV, and inclusion fees. When a validator fails to participate (e.g. going offline), it misses out on rewards and incurs small penalties, but when a validator misbehaves (e.g. double signing), it can be penalized much more heavily, aka slashed. To give a little more context, validator misbehavior can describe a couple of things, like:

Double signing

Surrounding votes

Downtime

Invalid block proposals

Unresponsiveness during finality

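Double signing (which includes proposing two different blocks for the same slot) and surround votes are the slashable offences: the protocol treats them as attacks and will not tolerate them. The other behaviors are punished more mildly. Downtime and unresponsiveness while the chain is failing to finalize lead to inactivity penalties (the “inactivity leak”), and an invalid block proposal is simply rejected by the network, costing the proposer its reward.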
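Double votes and surround votes are the two attestation offences that can be proven just by comparing two messages signed by the same validator. The sketch below is a simplified take on the `is_slashable_attestation_data` check in Ethereum's consensus specs; the `Vote` class and its fields are hypothetical stand-ins for the spec's `AttestationData`, and real clients also verify signatures and validator indices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vote:
    """Simplified attestation: a source -> target checkpoint link plus the voted head."""
    source_epoch: int
    target_epoch: int
    head_root: str  # hypothetical stand-in for the voted block root


def is_double_vote(a: Vote, b: Vote) -> bool:
    # Two different votes for the same target epoch.
    return a != b and a.target_epoch == b.target_epoch


def is_surround_vote(a: Vote, b: Vote) -> bool:
    # Vote `a` surrounds vote `b`: it starts earlier and ends later.
    return a.source_epoch < b.source_epoch and b.target_epoch < a.target_epoch


def is_slashable(a: Vote, b: Vote) -> bool:
    # Check both orderings so the caller need not know which vote surrounds which.
    return is_double_vote(a, b) or is_surround_vote(a, b) or is_surround_vote(b, a)


if __name__ == "__main__":
    honest = Vote(source_epoch=10, target_epoch=11, head_root="0xaa")
    print(is_slashable(honest, Vote(10, 11, "0xbb")))  # double vote
    print(is_slashable(Vote(8, 13, "0xcc"), honest))   # surround vote
    print(is_slashable(honest, Vote(11, 12, "0xdd")))  # ordinary next vote
```

Checking both orderings in `is_slashable` is a convenience choice here; the first two demo calls flag an offence and the third (a normal follow-up vote) does not.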

Carrots & Sticks

PoW systems only offer carrots (rewards) while PoS systems offer both carrots and sticks (rewards & penalties). This simple distinction has a massive impact on what can be built on top of the different mechanisms.

Benefits of Proof of Stake?

Vitalik had a great blog post covering the reasons to switch to Proof of Stake in this piece: Why Proof of Stake, where he describes 3 reasons why Proof of Stake is a superior blockchain security mechanism compared to Proof of Work.

Security: Proof of Stake offers more security for the same cost.

Attacks: Attacks are much easier to recover from in Proof of Stake.

Decentralization: Proof of Stake is more decentralized than ASICs.

Diving Deeper:

Benefit #1 - Security: “Proof of Stake offers more security for the same cost.”

Uh, okay. How? By eliminating expensive computation.

In PoW, miners need to invest in powerful hardware, such as specialized mining rigs, and then consume substantial amounts of electricity to solve complex mathematical puzzles. The miner that solves the puzzle first gets the block reward (6.25 BTC before the April 2024 halving, 3.125 BTC since, plus transaction fees). Buying and maintaining mining rigs requires a significant investment and is highly competitive, so there is a very high barrier to entry to becoming a successful miner.

In PoS, the need for computational work is eliminated or significantly reduced. This is because validators are chosen based on the number of tokens they hold and are willing to stake as collateral, rather than the computational power they possess. This eliminates the need for expensive mining equipment and reduces the associated costs, making the network more secure for the same investment.

Vitalik made a great point on this topic: “Unlike ASICs, deposited coins do not depreciate, and when you’re done staking you get your coins back after a short delay. Hence, participants should be willing to pay much higher capital costs for the same quantity of rewards.”

I just want to point out that while it is true that deposited coins don’t depreciate like an ASIC will, they can still go down in value. ETH is volatile, so be mindful of this.

Now, remember: on a PoW system, the more computation you control, the higher your chance of solving the mathematical problem first, and therefore of getting the block reward. PoS works very similarly: the more stake you have, the higher the chance you are chosen to propose the next block and receive the block reward.
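A minimal sketch of stake-weighted selection, assuming a simple "pick proportional to stake" rule. Ethereum's actual proposer selection (RANDAO randomness plus a sampling step weighted by effective balance, `compute_proposer_index` in the consensus specs) is more involved; the participant names and stake amounts here are hypothetical.

```python
import random
from collections import Counter


def pick_proposer(stakes: dict[str, float], rng: random.Random) -> str:
    """Choose one proposer with probability proportional to its stake."""
    names = list(stakes)
    return rng.choices(names, weights=[stakes[n] for n in names], k=1)[0]


def simulate(stakes: dict[str, float], slots: int = 100_000, seed: int = 42) -> dict[str, float]:
    """Return the fraction of simulated slots each participant was chosen to propose."""
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    counts = Counter(pick_proposer(stakes, rng) for _ in range(slots))
    return {name: counts[name] / slots for name in stakes}


if __name__ == "__main__":
    stakes = {"alice": 32, "bob": 64, "carol": 320}  # hypothetical ETH amounts
    total = sum(stakes.values())
    for name, share in simulate(stakes).items():
        print(f"{name}: stake share {stakes[name] / total:.3f}, proposal share {share:.3f}")
```

Over many slots each participant's share of proposals converges to its share of total stake, which is exactly why attackers want as much stake as possible.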

Benefit #2 - Attacks: “Attacks are much easier to recover from in Proof of Stake.”

Let’s go over a few types of attacks:

Spawn Camping Attack: A spawn camping attack is when an attacker exploits a vulnerability in a blockchain, and the blockchain fixes it by deploying a countermeasure. The countermeasure works and removes the attacker's initial advantage, but it doesn’t matter: the attacker was prepared to incur the initial cost and follows up with continued attacks that require more fixes. This lets the attacker stay at an advantage, similar to how a player can “spawn trap” other players in games like Call of Duty.

The 51% Attack: The 51% attack refers to a blockchain attack where a single entity acquires control of a majority (more than 50%) of the protocol's consensus power: a majority of the hash rate in a PoW system, or a majority of the stake in a PoS system. This level of control gives the attacker the ability to exploit the network and potentially destroy it in the process. Specifically, it allows the attacker(s) to take control of future block production and change which transactions end up in current/future blocks. Furthermore, any transactions completed while the attacker(s) control the chain could be reverted by them. Reverting transactions would allow the attacker(s) to spend those same coins again, which is called a double-spend attack. In other words, double spending means the same unit of cryptocurrency is used more than once, which can be detrimental to a blockchain.

These are some of the attacks that can happen to blockchains. Let’s talk about why PoS systems can handle them better than PoW systems:

Slashing Mechanism: Proof of Stake introduces a slashing mechanism where a validator's staked tokens can be partially or fully destroyed as a penalty for misbehavior or malicious activity. When a validator tries to attack the network or acts against consensus rules, their stake (and not anyone else's) can be slashed. Slashing penalties present a huge incentive to behave honestly, as validators risk losing part or all of their stake when misbehaving. There is no equivalent to slashing in PoW systems, as they can only offer rewards, unlike PoS, which can offer rewards and penalties. Here’s a Vlad Zamfir quote on slashing and Proof of Work: “It would be like if the ASIC farm burned down when it mined an invalid block.”

Majority Stake: Proof of Stake systems select the next block's proposer based on token holdings (ETH staked): the more ETH staked, the higher the chance of being selected to propose the next block. This works similarly in PoW, where computational power determines mining rewards. Because of this, attackers in either system look to acquire as much computation or stake as possible to gain control over the network. Although it may seem counterintuitive, successfully acquiring a majority stake in a PoS system is much harder than acquiring a majority of computation in an ASIC-dominated PoW system.

Why is That?

Let’s say there is an attack on an ASIC based system and the attacker is trying to control the majority of computation by acquiring all the ASICs. The system can respond to the initial attack by hard-forking to change the PoW algorithm, which will render all ASICs useless (both honest & attacking miners). This is a step in the right direction, however, if the attacker can accept the blow of the initial cost related to the ASICs, he/she can potentially continue the attack. This is because the chain won’t have enough time to develop and distribute new ASICs for the updated algorithm, and will have to revert to using GPUs. Because GPUs are so cheap relative to ASICs, the attacker can (potentially) continue the attack by buying up all of the GPUs and acquiring a majority of computation. Also, it’s important to note that there are services that lease out GPUs and ASICs, which could enable this attack to occur more easily.

The Same Attack on Ethereum (PoS):

Acquiring a majority of the stake means an attacker has to stake more ETH than is already staked by everyone else, which is a lot (as of writing, 26% of ETH is staked, which is 31M ETH or $123B!). Buying $123B of ETH at once is basically impossible, and even if it were possible, the price would rise dramatically. The contrast to Bitcoin is noticeable here: attacking Bitcoin gets cheaper the longer the attack goes on, as the chain has to switch from ASICs to GPUs, whereas attacking Ethereum gets more expensive the longer it goes on, as the attacker is constantly forced to buy more of the same token. Ethereum can also respond to the attacker by slashing their coins, forcing them to buy even more. The more the attacker buys, the higher they push the price. It’s impossible to amass such a large stake without significantly moving the market price, and at some point the attacker will run out of money.

In Vitalik's words: “The game is very asymmetric, and not in the attackers favour.”
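To put rough numbers on the asymmetry: if an attacker adds new stake X on top of the existing stake S, their share is X / (S + X), so reaching a share f requires X = f·S / (1 − f). The sketch below plugs in the figures quoted above (31M ETH staked, about $123B) and, as a simplifying assumption, holds the ETH price constant, which understates the real cost since buying that much would push the price up.

```python
def stake_needed(existing_stake: float, target_share: float) -> float:
    """ETH an attacker must add so that attacker / (existing + attacker) == target_share."""
    if not 0 < target_share < 1:
        raise ValueError("target_share must be strictly between 0 and 1")
    return target_share * existing_stake / (1 - target_share)


if __name__ == "__main__":
    staked_eth = 31e6               # ETH staked, as quoted in the text
    eth_price = 123e9 / staked_eth  # implied USD price from the quoted $123B (~$3,968)
    for share in (1 / 3, 1 / 2, 2 / 3):
        eth = stake_needed(staked_eth, share)
        usd = eth * eth_price       # assumes the price does not move while buying
        print(f"{share:.0%} of stake: {eth / 1e6:.1f}M ETH, ~${usd / 1e9:.0f}B")
```

Reaching half of all stake takes as much new ETH as is already staked (the 31M ETH / $123B figure), and reaching two-thirds takes twice that. Because the price is held fixed, these are lower bounds.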

Benefit #3 - Decentralization: “Proof of Stake is more decentralized than ASICs.”

Staking is much easier to profitably participate in when compared to becoming a profitable miner. Bitcoin mining is dominated by ASICs, and setting up an ASIC farm to mine BTC will cost you millions of dollars. Solo staking isn’t exactly cheap (32 ETH), but it is cheaper than setting up a successful ASIC farm.

On Ethereum, it’s possible to become a solo staker if you meet the requirements: 32 ETH and some hardware. The Ethereum community encourages people who can solo stake to do so, as it massively improves the decentralization properties of the network. It’s great that the Ethereum community encourages solo staking; the problem is that it is just too expensive and/or too technically intimidating for the average Joe.

Enter: Staking Providers

You can think of staking providers as the equivalent to Bitcoin mining pools. They do a couple things:

Allow individuals or entities to stake with less than 32 ETH.

Maintain the necessary staking infrastructure: servers, network connectivity, etc.

Assist in setting up validator nodes, configuring necessary software, and meeting the Ethereum network’s requirements.

Monitor the nodes, perform any updates, and ensure high uptime.

Offer tools to users like staking dashboards.

Distribute rewards to users.

Unlock capital efficiency.
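Pooling deposits and distributing rewards boils down to pro-rata accounting. Below is a minimal sketch under stated assumptions: a flat 10% operator fee, hypothetical depositors and a hypothetical 1 ETH reward. Real providers such as Lido and Rocket Pool each use their own, more involved accounting, so treat this as an illustration only.

```python
from fractions import Fraction


def distribute_rewards(
    deposits: dict[str, Fraction],
    reward: Fraction,
    fee_rate: Fraction = Fraction(1, 10),  # hypothetical 10% operator fee
) -> tuple[dict[str, Fraction], Fraction]:
    """Split `reward` pro rata by deposit after the operator takes `fee_rate`."""
    total = sum(deposits.values())
    fee = reward * fee_rate
    net = reward - fee
    payouts = {who: net * amount / total for who, amount in deposits.items()}
    return payouts, fee


if __name__ == "__main__":
    # Three hypothetical depositors who together fill one 32 ETH validator.
    deposits = {"alice": Fraction(1), "bob": Fraction(5), "carol": Fraction(26)}
    payouts, fee = distribute_rewards(deposits, reward=Fraction(1))
    for who, amount in payouts.items():
        print(f"{who}: {float(amount):.6f} ETH")
    print(f"operator fee: {float(fee):.6f} ETH")
```

Using `Fraction` instead of floats keeps the books exact: the payouts plus the fee always add back up to the full reward.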

Aside from allowing you to stake with less than 32 ETH, I think the biggest unlock for users is capital efficiency. Staking providers give you a liquid staking token (LST) that represents your staked ETH and is fully backed by it. This token can then be used across the Ethereum ecosystem instead of sitting idle in a staking contract. Common LSTs include Lido’s stETH and Rocket Pool’s rETH. Staking pools seem best suited for most users, as 32 ETH is hard to come by ($127,520) and maintaining the technical side of staking is difficult.

It’s important to note that centralization issues can arise with staking providers just like they do with mining pools. As of writing, the most dominant staking provider is Lido with 32% market share, which could become a serious problem if this share keeps growing (some people think it is already a problem at 32%; read more about the discussion here). That said, Lido isn’t one single validator: it outsources to several node operators, around 37 trusted parties in total.

Here’s a view of Ethereum’s staking services and their market shares:

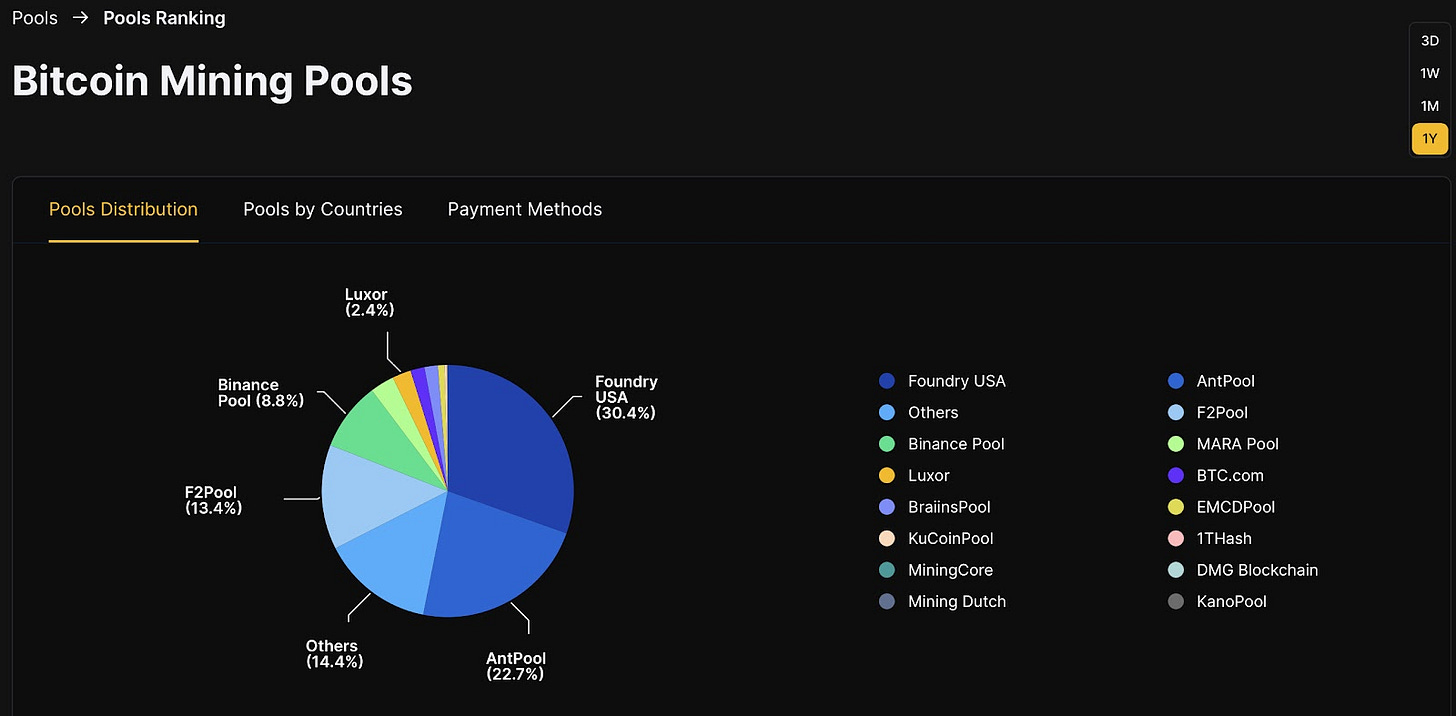

And a view of Bitcoin’s mining pools & their market shares:

What are the Problems with Large LSTs?

An LST that has above 33% market share can disrupt finality.

An LST that has above 50% market share can censor the network.

An LST that has above 66% market share can finalize blocks on its own.
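The three thresholds follow from Ethereum's consensus rules: finality needs votes from two-thirds of the stake, and a majority controls the fork choice. A small helper makes the mapping explicit. The simplifying assumption here is that the LST's entire share votes as one bloc, which, as noted above, is not how Lido's stake is actually operated.

```python
from fractions import Fraction

# Thresholds as fractions of total active stake.
ONE_THIRD = Fraction(1, 3)
ONE_HALF = Fraction(1, 2)
TWO_THIRDS = Fraction(2, 3)


def bloc_powers(share: Fraction) -> list[str]:
    """What a single bloc controlling `share` of stake could do if it acted together."""
    powers = []
    if share > ONE_THIRD:
        powers.append("prevent finality")
    if share > ONE_HALF:
        powers.append("control the fork choice and censor")
    if share > TWO_THIRDS:
        powers.append("finalize on its own")
    return powers


if __name__ == "__main__":
    for pct in (22, 32, 34, 51, 67):
        print(f"{pct}%: {bloc_powers(Fraction(pct, 100)) or ['none of the above']}")
```

Using exact fractions avoids floating-point edge cases right at the 1/3 and 2/3 boundaries.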

These are the problems with LSTs gaining larger and larger market share. Some members of the community want Lido to self-limit its growth, while others are neutral. Lido voted against self-limiting a while back, while other providers like Rocket Pool and Stader have voted in favour of self-limiting (specifically, Rocket Pool voted to self-limit at 33% and Stader at 22%).

This is the best discussion/debate I’ve seen over LSTs and their relationship with Ethereum, specifically Lido and its market share. Check the replies.

All in all, helping secure a PoW system is harder because of its higher barriers to entry, whereas staking in a PoS system (whether you're solo staking or in a staking pool) is more accessible and open to the average Joe.

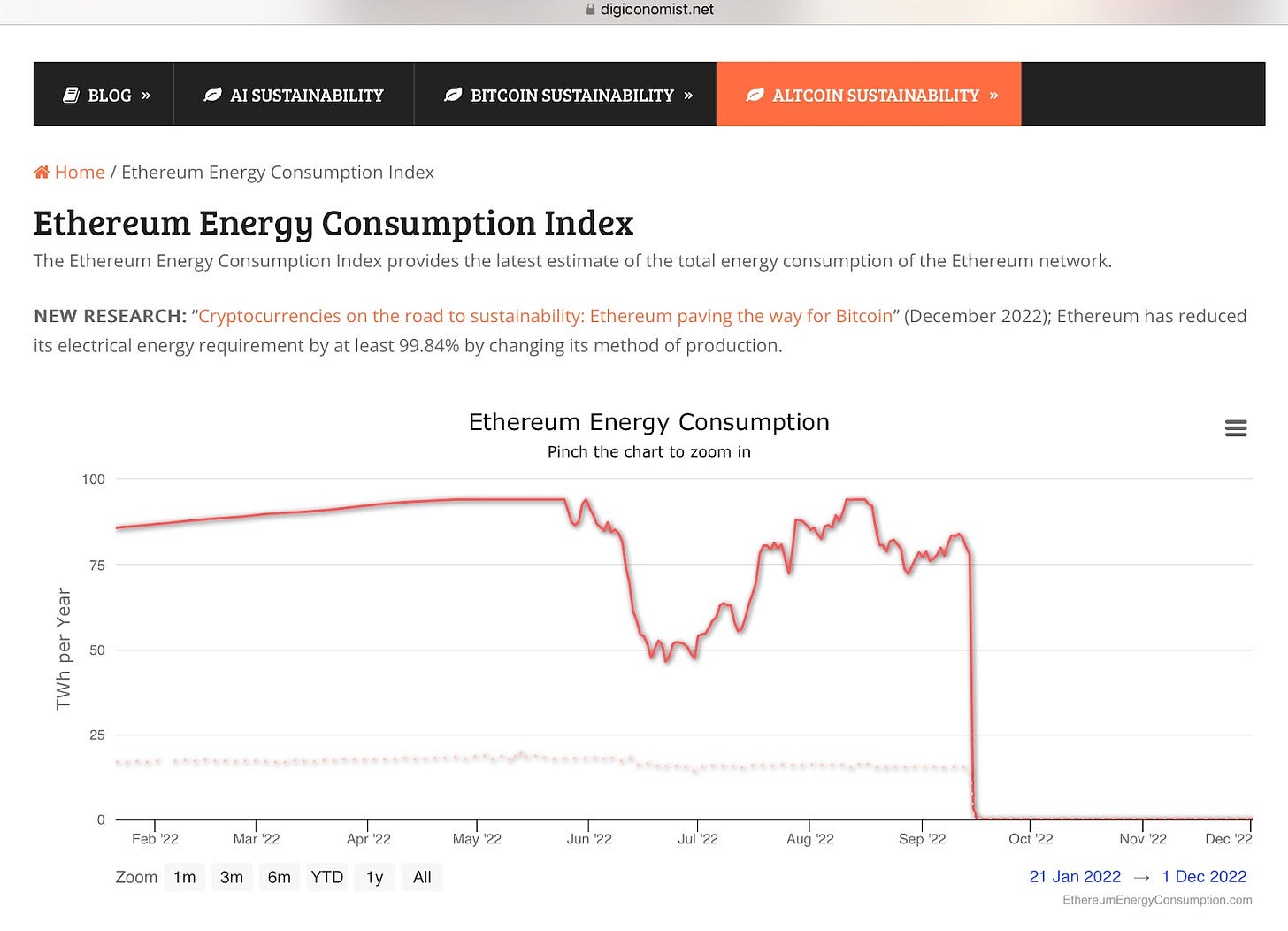

Benefit #4 - Energy Expenditure: “The Merge has had huge implications on Ethereum’s energy expenditure and its carbon footprint.”

Some numbers:

Energy expenditure: reduced by 99.988%

Carbon footprint: reduced by 99.992%

PoW electricity consumption: 22,900,320 MWh/yr

PoS electricity consumption: 2,600.86 MWh/yr
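As a quick sanity check, the headline percentage can be re-derived from the two annual consumption figures above; this only reuses the quoted numbers and adds no new data.

```python
POW_MWH_PER_YEAR = 22_900_320  # PoW electricity consumption quoted above
POS_MWH_PER_YEAR = 2_600.86    # PoS electricity consumption quoted above


def percent_reduction(before: float, after: float) -> float:
    """Percentage decrease when going from `before` to `after`."""
    return (before - after) / before * 100


if __name__ == "__main__":
    print(f"Reduction: {percent_reduction(POW_MWH_PER_YEAR, POS_MWH_PER_YEAR):.4f}%")
    print(f"PoW used ~{POW_MWH_PER_YEAR / POS_MWH_PER_YEAR:,.0f}x as much electricity as PoS")
```

This works out to about 99.9886%, consistent with the quoted 99.988% reduction.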

The numbers are just incredible. This report from CCRI goes really deep into the Merge’s impact on Ethereum's energy consumption.

Look at the drop off in this chart!

& here are the consumption numbers over the past 3 months:

Ethereum’s energy consumption went from averaging above 75 TWh/year to below 0.01 TWh/year!

Whether you believe PoW is a waste of energy or not, lots of other people do, and it’s generally a headwind from a narrative perspective. ESG is only becoming more entrenched on Wall St. and in the mainstream media, and it seems like the only letter that matters to them is E (environment). Mainstream media, Wall Street, and the like do NOT appreciate things they consider harmful to the environment, period. I think it's a big deal that Ethereum won't have to defend itself from the ESG opposition targeted at PoW blockchains, and can be viewed as a green investment to institutions and other big players.

“It is easier to change the narrative than to win the war.” - DegenSpartan, probably.

We talked about the benefits, now let’s talk about some drawbacks.

Drawbacks to Proof of Stake

Less Established History

Harder to Implement

More Complex

Higher Setup Cost

Drawback #1 - Less Established History: “Proof of Stake is less battle tested compared to Proof of Work.”

The statement has some truth to it. PoW has been the dominant consensus mechanism throughout the life of the crypto industry (though its relevance is declining). In the early -> middle years of crypto (I’ll say 2009-2016), basically every single new protocol adopted Proof of Work as their consensus mechanism, or piggy-backed (merge-mined) off of another Proof of Work protocol.

Ethereum was no different: it was the second-biggest PoW chain, behind Bitcoin, for much of its lifetime, before making the transition to Proof of Stake in 2022. So much activity and research lived on Proof of Work systems before Proof of Stake systems were even conceived, let alone implemented, and so the argument is that PoW is more battle tested than PoS.

Counter-Argument

Although the argument still somewhat holds, the “battle-tested” point in favor of PoW has become weaker over the years. PoS is used by the second-largest protocol, Ethereum, along with a lot of other protocols, like Cardano, Solana, Avalanche, Tron, Polkadot, Polygon, Cosmos, Algorand, Mina, etc. A large portion of crypto activity happens on PoS chains, not PoW ones (although PoW chains make up a bigger share of market capitalization than PoS chains). When I say activity, I mean DeFi (swaps, borrow/lend, stables), NFTs, payments, DAO creation, research, etc.

So, I think it's naive to flatly say “PoW is more battle tested than PoS.”

Now, is PoW more battle tested because:

It was the first consensus mechanism used by crypto protocols and has survived the longest?

It has the most research behind it and has had the longest time in the market?

Yeah, point taken.

But, is PoW more battle tested in terms of hosting applications and the user activity on top?

Can it provide the necessary infrastructure to build the applications and support that activity?

No, not even close.

All of this usage brings feedback, and things are improved accordingly. The infrastructure (L2s, oracles, bridges, wallets, etc.) that supports this activity is much more mature and more abundant on PoS chains compared to PoW ones. Take Bitcoin for example - the infrastructure just isn’t there.

And although not there yet, the infrastructure could be coming. Ordinals recently hit the scene and have sparked discussion around the infrastructure on Bitcoin and what more needs to be done to host a successful Bitcoin economy in the future. I think it’s great that there is a discussion about Bitcoin’s (lack of) infrastructure; this just wasn’t happening in previous years.

Also, I think the fact that there is now serious discussion around improving Bitcoin’s infrastructure highlights an important culture change that’s been ongoing between Ethereum and Bitcoin:

“Ethereum used to be downstream of Bitcoin culture. Now, it’s the other way around.” - David Hoffman.

Drawback #2 - Harder to Implement: “Proof of Stake will never actually be implemented on Ethereum successfully.”

Implementing a PoS mechanism is much more complex than implementing a PoW one, especially when considering that Ethereum was doing a swap of the two systems on a live protocol. Ethereum was basically trying to change the engine of the plane while it was still flying, and so people heavily doubted the upgrade would ever go live.

After many missed timelines and a wait longer than expected, the merge finally came. On September 15th, 2022 Ethereum successfully implemented PoS - and impressively, it didn't skip a beat. Basically nothing went wrong, which is just an incredible technical feat for an upgrade of this size.

Next.

Drawback #3 - More Complex: “PoS is more complex to run than PoW.”

This is true. Validators on Ethereum now need to run 3 pieces of software, compared to only 1 previously on PoW: an execution client, a consensus (beacon) client, and a validator client. Running multiple pieces of software is more complex than running one, so no argument here. Proof of Work is also an objectively less complex consensus mechanism than Proof of Stake.

Drawback #4 - Higher Setup Cost: “It’s cheaper to become a miner on PoW than to become a staker on PoS.”

True, PoW has the advantage in the sense that you can become a miner with a balance of 0, whereas with PoS you need to actually have an ETH balance to become a staker. Though I find this to be a weak point - you have to buy mining equipment to become a miner. It’s not free.

Withdrawals

Staking withdrawals have been shipped! Shapella (the Shanghai + Capella upgrade) went live on April 12th, and enabled withdrawals. Withdrawal functionality was the cornerstone of the Shapella upgrade and a main priority for Ethereum developers once the merge was complete. Shoutout to the devs as they did an excellent job.

What is the Shanghai/Capella Upgrade?

The Shapella (Shanghai + Capella) upgrade went live on April 12th, 2023. The upgrade enabled withdrawals, which was the final puzzle piece to place on the board of Proof of Stake.

Distributed Validators (DVT)

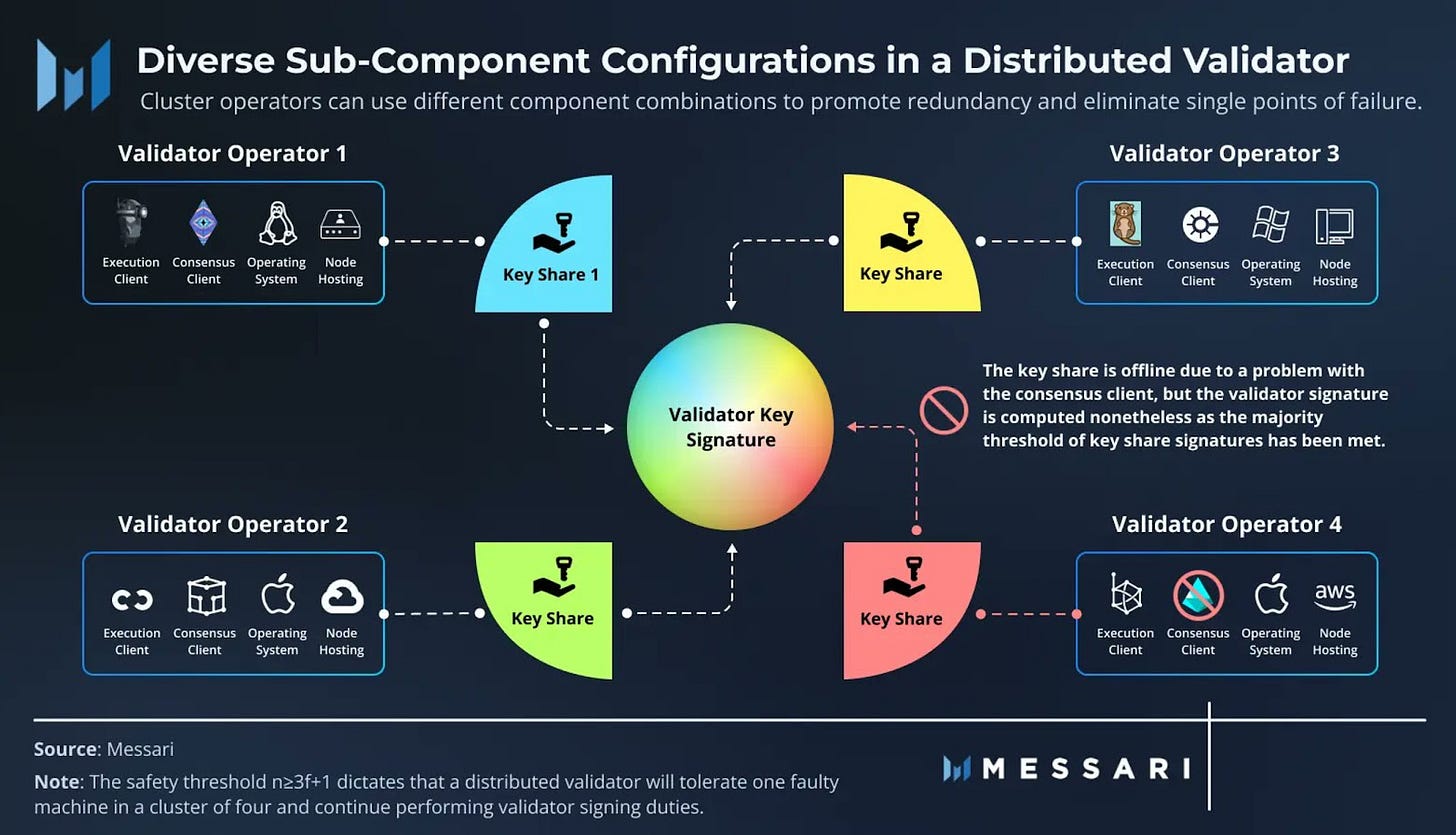

Distributed Validator Technology (DVT) allows the duties of a single Ethereum validator to be split among several nodes, which then act together as one validator.

Motivation

Distributing the responsibilities of validators can help reduce some of the issues today’s Ethereum validators can experience, like downtime, client bugs, hardware failures, config issues, connectivity issues, and more. In a DVT world, if one node fails to do its job (for example, by going offline), the validator it is part of is not affected and keeps operating, as long as enough of the other nodes are still online. At its core, DVT is about removing single points of failure for validators, which will improve the robustness of Ethereum’s security.

Mental Models

DVT is like a multisig but for staking.

Risks to Staking

Here are the current risks to staking that Ethereum wants to eliminate:

Risk #1 - Key Theft: Institutions trusting one employee to handle the validator key, or individuals trusting institutions to handle the validator key.

Solution: Split a validator key into smaller keys and distribute it (Shamir Secret Sharing). You then recombine signatures from the smaller keys to produce a single signature (Threshold Signature Scheme).
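A minimal sketch of Shamir secret sharing over a prime field, using a 3-of-4 split. The prime, the toy secret and the share count are illustrative assumptions; real DVT systems split BLS keys with audited libraries and typically create the shares through a DKG, so the full key never sits in one place.

```python
import secrets

# Prime field modulus (2**127 - 1 is a Mersenne prime); illustrative choice only.
PRIME = 2**127 - 1


def split_secret(secret: int, threshold: int, num_shares: int) -> list[tuple[int, int]]:
    """Split `secret` into points on a random polynomial of degree threshold - 1."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be a field element")
    # f(x) = secret + a1*x + ... + a_{t-1}*x^{t-1}, with random coefficients.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def f(x: int) -> int:
        return sum(c * pow(x, i, PRIME) for i, c in enumerate(coeffs)) % PRIME

    return [(x, f(x)) for x in range(1, num_shares + 1)]


def recover_secret(shares: list[tuple[int, int]]) -> int:
    """Lagrange-interpolate the shares at x = 0 to recover f(0), the secret."""
    total = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        total = (total + yi * num * pow(den, -1, PRIME)) % PRIME
    return total


if __name__ == "__main__":
    key = 123456789  # toy stand-in for a validator private key
    shares = split_secret(key, threshold=3, num_shares=4)
    print(recover_secret(shares[:3]) == key)                      # any 3 shares suffice
    print(recover_secret([shares[0], shares[3], shares[2]]) == key)
```

With only 2 of the 4 shares, interpolation yields an unrelated value, which is what protects the key if a single operator is compromised.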

Risk #2 - Node Failure: Connectivity issues, software bugs, hardware failures, config issues.

Solution: DVT uses redundancy to protect against node failure: the same validator is operated by multiple nodes in multiple places. Although this helps, it creates another issue: if two nodes are not in the same state, they can accidentally vote for two different chains, resulting in slashing. To solve this, the nodes need to reach consensus among themselves, so that they all vote on the same state and avoid slashing penalties. We will get to consensus later.

How Does DVT Work?

To make DVT work we need to use a few different primitives:

Distributed Key Generation (DKG): Distributed key generation is the process by which a set of nodes jointly generate the key shares for a validator, so the full validator key never exists in one place. Each node holds only a share of the validator key, the opposite of the current architecture, where one validator holds the entire key.

Shamir Secret Sharing: Shamir secret sharing splits a key into shares such that any threshold number of shares can recover it, while fewer shares reveal nothing about it. In a DV cluster, each node uses its key share to produce a partial signature. Those partial signatures are then combined into 1 final signature that is valid for the validator's public key, without anyone ever reconstructing the private key.

Threshold Signature Scheme (TSS): The threshold signature scheme decides how many partial signatures (one per key share) are needed to produce a valid signature. The required number, aka the threshold, differs between clusters of nodes. Some might require 3 out of 4, some might require 4 out of 6 - it all depends.

Multi-Party Computation (MPC): MPC allows multiple parties to jointly compute a result from their own private data (in this case, key shares) without revealing that data to each other. In DVT, the jointly computed result is a signature rather than the private key itself, which makes it impossible for any 1 node to generate the private key: each node only has access to its own key share and no one else's.

Consensus: And finally, the nodes need to reach consensus. To do this, one node in the cluster is selected as the leader. Its job is to propose a block and share it with the other nodes in the cluster. The other nodes then add their partial signatures to the aggregate signature. Once the threshold of signatures is reached, the block is proposed on Ethereum.
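The key property of threshold signing is that partial signatures can be combined without reassembling the key. The toy sketch below shows the idea: each node "signs" by multiplying its key share by a hash of the message, and a Lagrange-weighted sum of any threshold-many partial signatures equals what the full key would have produced. This linear "signature" is deliberately insecure and only stands in for BLS, where the same Lagrange-weighted combination is applied to elliptic-curve points; the field, key and message are illustrative assumptions.

```python
import hashlib
import secrets

PRIME = 2**127 - 1  # illustrative field modulus (a Mersenne prime)


def hash_to_field(message: bytes) -> int:
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % PRIME


def make_shares(secret: int, threshold: int, num_shares: int) -> dict[int, int]:
    """Shamir-share `secret`; returns {node_index: key_share}."""
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    return {x: sum(c * pow(x, i, PRIME) for i, c in enumerate(coeffs)) % PRIME
            for x in range(1, num_shares + 1)}


def lagrange_at_zero(xi: int, xs: list[int]) -> int:
    """Lagrange coefficient for node xi when interpolating at x = 0."""
    num, den = 1, 1
    for xj in xs:
        if xj != xi:
            num = num * (-xj) % PRIME
            den = den * (xi - xj) % PRIME
    return num * pow(den, -1, PRIME) % PRIME


def partial_sign(key_share: int, message: bytes) -> int:
    # TOY and insecure: a linear "signature" standing in for BLS.
    return key_share * hash_to_field(message) % PRIME


def combine(partials: dict[int, int]) -> int:
    """Aggregate threshold-many partial signatures; no key share is needed here."""
    xs = list(partials)
    return sum(lagrange_at_zero(x, xs) * sig for x, sig in partials.items()) % PRIME


if __name__ == "__main__":
    full_key = secrets.randbelow(PRIME)  # with a real DKG, nobody would ever hold this
    shares = make_shares(full_key, threshold=3, num_shares=4)
    msg = b"block proposal"
    partials = {x: partial_sign(shares[x], msg) for x in (1, 3, 4)}  # nodes 1, 3, 4 sign
    print(combine(partials) == partial_sign(full_key, msg))
```

Any 3 of the 4 nodes produce the same combined signature, so one node can be offline without the validator missing its duty.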

Benefits of DVT

Lowers risk of slashing

Reduces key theft risk (no single node holds the full key)

Reduces node failure (connectivity issues, hardware/software bugs, config issues)

Lowers the barriers to entry for solo stakers (don’t need 32 ETH)

Projects Implementing DVT

SSV Network: SSV Network is building an implementation of DVT. SSV’s mainnet went live in September 2023, and so far it has amassed 100+ operators, 2,000+ validators, and 70,000+ ETH ($278M).

Obol: Obol is also building an implementation of DVT, and it recently went live on December 1st. Lido recently announced it will be using Obol (& SSV) to provide DVT to its current set of validators. Here are some stats from the SSV testnet:

Stader Labs: Stader will be adopting DVT in its DVT stake pool, one of three pools it will soon offer (the permissioned and permissionless pools being the other 2). The permissionless pool has a bonding requirement of 4 ETH, which is the lowest in the industry. Stader plans to cut the bonding requirement by at least 50% when the DVT pool, which is currently on testnet with SSV, goes live.

Diva Labs: Diva is a liquid staking provider that is fully powered by DVT. Diva stands for distributed validation, aka squad staking, which allows users with less than 32 ETH to pool their assets together. Research so far suggests that Diva operators will only need 1 ETH to run a validator.

ClayStack: A DVT-based Ethereum liquid staking token (LST).

Future of DVT

Although live DVT implementations already exist (Obol, SSV) and DVs have been marked as complete on the roadmap, there is still a ton of work to be done. Oisin Kyne (co-founder & CTO of Obol) released a fantastic blog post covering his view on the future of distributed validators, and a roadmap on how to get there.

One-Shot Signatures

One-shot signatures could play a role in the future of DVT.

One-shot signatures are special cryptographic signatures where a private key can only sign one message. They rely on quantum mechanics: the private key is a quantum state, which (by the no-cloning theorem) cannot be copied and is destroyed in the process of producing a signature. Despite their quantum basis, the signatures themselves are just ordinary bits and bytes, so using them does not require quantum communication.

Benefits of One-Shot Signatures

Enhanced privacy

Enhanced security

Mitigation of key reuse issues

Lower complexity

Quantum resistant (one-shot signature schemes based on hash functions are quantum resistant)

Justin Drake had a great response related to one-shot signatures when asked about the future of DVTs.

Question:

“Is secret shared validator, or DVT a must for Ethereum?”

Justin Drake (bobthesponge1 on reddit) response:

“I've partially changed my mind on this! In the long term, thanks to one-shot signatures, the importance of operators (and therefore the importance of distributing the control of operators) will dramatically go down. The reason is that operators won't be able to slash stakers and trustless delegation will be possible. One-shot signatures won't be ready for decades and in the meantime DVT is a useful mechanism to remove the need to trust a particular operator (e.g. in the context of staking delegation, liquid staking, and restaking).”

Justin also talked about what one-shot signatures can unlock:

slashing removal: The double vote and surround vote slashing conditions can be removed.

perfect finality: Instead of relying on economic finality we can have perfect finality that is guaranteed by cryptography and the laws of physics.

51% finality threshold: The threshold for finality can be reduced from 66% to 51%.

instant queue clearing: The activation and exit queues can be instantly cleared whenever finality is reached without inactivity leaking (the default case).

weak subjectivity: Weak subjectivity no longer applies, at least in the default case where finality is reached without the need for inactivity leaking.

trustless liquid staking: It becomes possible to build staking delegation protocols like Lido or RocketPool that don't require trusted or bonded operators.

restaking-free PoS: It becomes possible to design PoS where restaking is neutered.

routing-free Lightning: One can design a version of the Lightning network without any of the routing and liquidity issues.

proof of location: One can design a proof of geographical location that uses network latencies to triangulate the position of an entity, without the possibility for that entity to cheat by having multiple copies of the private key.

Read more about one-shot signatures: Reddit AMA

Closing Thoughts

Staking centralization is a hot topic in crypto (and for good reason). A core tenet of Ethereum is decentralization, so using technology like DVT to distribute, and therefore decentralize, the validator set is a big plus for the ecosystem as a whole. Until things like one-shot signatures are ready, DVT will serve as an excellent mechanism to reduce the need to trust operators and mitigate the risks involved with that trust.

Single Slot Finality (SSF)

Finality refers to the point in time when a block is fully settled on a blockchain. The SSF upgrade looks to speed up the time it takes to finalize blocks on Ethereum.

Motivation

Ethereum finalizes every 2 epochs (or every 64 slots). Each slot is 12 seconds, and so finalization takes around 12.8 minutes, which is too long. Single slot finality would change this setup so a block is finalized every slot (hence the name).

Once a block is considered final, it is set in stone and cannot be reversed, unless 33% or more of the total staked ETH is compromised. Attackers with more than 33% of staked ETH can also stop the chain from finalizing: “If 1/3 or more of the staked ether is maliciously attesting or failing to attest, then a 2/3 supermajority cannot exist and the chain cannot finalize.”

What’s a Slot?

A slot is an opportunity for a block.

Ethereum PoS contains slots and epochs. Each slot (12 seconds) is a fixed time period within an epoch (6.4 minutes), and there are 32 slots per epoch. At the beginning of each epoch, the entire validator set is randomly split into 32 committees, with 1 committee per slot. Each committee votes on the block in its slot, so each validator votes once per epoch. In the following epoch, the network double-checks that everyone has received the votes from the previous epoch. So the voting happens in the first epoch (justifying a checkpoint), and the second epoch confirms that everyone received those votes (finalizing it). After two epochs, finalization has been achieved. This is how Ethereum works today.
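The timing above reduces to simple arithmetic. The sketch below uses the constants from this section (12-second slots, 32 slots per epoch, finality after 2 epochs) plus a simplified committee assignment: it shuffles validators with Python's `random` module and deals them into one committee per slot, whereas the real beacon chain uses a RANDAO-seeded swap-or-not shuffle and can have up to 64 committees per slot. The function names are hypothetical.

```python
import random

SECONDS_PER_SLOT = 12
SLOTS_PER_EPOCH = 32
EPOCHS_TO_FINALITY = 2  # simplified: one epoch of voting, one to confirm it


def time_to_finality_seconds():
    return SECONDS_PER_SLOT * SLOTS_PER_EPOCH * EPOCHS_TO_FINALITY


def assign_committees(validator_ids, epoch_seed):
    """Shuffle validators, then deal them into one committee per slot.

    Simplification: the beacon chain uses a RANDAO-seeded swap-or-not shuffle
    and may split each slot's validators into several committees.
    """
    shuffled = list(validator_ids)
    random.Random(epoch_seed).shuffle(shuffled)
    return [shuffled[slot::SLOTS_PER_EPOCH] for slot in range(SLOTS_PER_EPOCH)]


committees = assign_committees(range(1_000), epoch_seed=42)
```

768 seconds is the 12.8 minutes quoted above, and each validator lands in exactly one committee, so it votes once per epoch. Under SSF, a single 12-second slot would be the entire time to finality.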

SSF changes this framework by introducing finality on a per-slot basis. In each slot, a block proposer proposes a block and validators vote on it; once a 2/3 supermajority of stake has voted for it within that same slot, the block is finalized.

By finalizing the block proposed within each slot, SSF significantly reduces the chance of reorgs. Once a slot's block is finalized, no competing branch for that time period can overtake it, so blocks are no longer left competing with each other for the same slot (the #1 cause of reorgs), and the chance of reorganizations drastically decreases. Fewer reorgs means a more stable and consistent blockchain history, which is what we want to achieve.

What’s a Reorg?

A blockchain reorganization (reorg) is what happens in blockchain networks when the existing consensus on a chain is disrupted and the chain of blocks is rearranged. Reorgs can happen for several reasons, such as:

Different blocks are produced for the same slot at the same time (most common)

Client bugs

Interaction bugs

Malicious attacks

Reorgs start with forks, where there are temporarily two competing chains. A group of validators known as attesters vote on the head of the canonical chain, and the branch with the most attestations (the most ‘weight’) wins; nodes that were following the losing branch reorg onto the winner.
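The "most weight wins" rule can be sketched as a simplified LMD-GHOST walk. This is a minimal illustration, assuming each validator's latest vote counts its stake toward the voted block and all of that block's ancestors; it skips justification, proposer boost, and everything else the real consensus spec handles, and `ghost_head` is a hypothetical name. Ties are broken by the higher block id, mirroring the consensus spec's tie-break on block root.

```python
from collections import defaultdict


def ghost_head(parent, latest_votes, stake, root):
    """Greedy heaviest-observed-subtree walk (simplified LMD-GHOST).

    parent:       block -> parent block (the root has no entry)
    latest_votes: validator -> block from that validator's latest attestation
    stake:        validator -> effective balance used as vote weight
    """
    children = defaultdict(list)
    for block, par in parent.items():
        children[par].append(block)

    # A vote for a block also supports every ancestor of that block.
    support = defaultdict(int)
    for validator, block in latest_votes.items():
        while block is not None:
            support[block] += stake[validator]
            block = parent.get(block)

    head = root
    while children[head]:
        # Step into the heaviest child subtree; break ties by block id.
        head = max(children[head], key=lambda b: (support[b], b))
    return head


# Two competing blocks A and B at the same height; C builds on A.
parent = {"A": "genesis", "B": "genesis", "C": "A"}
votes = {"v1": "C", "v2": "B", "v3": "C"}
stake = {"v1": 32, "v2": 32, "v3": 32}
```

Here the A branch carries 64 ETH of support against 32 for B, so the walk follows A and ends at C; if the votes flip toward B, nodes following C would reorg onto B.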

Single slot finality would take the current 12.8-minute finality time and reduce it to 12 seconds (12 seconds is a single slot on Ethereum today, ergo single slot finality). This would be a massive reduction in time to finality and have several positive implications.

Benefits of SSF

Improved UX: Instead of waiting 12.8 minutes for your transaction to be finalized, you would wait 12 seconds. That is a 64x improvement (768 seconds down to 12), and would result in a better UX for everyone. Makes you wonder what could be built on the protocol when finality is 12 seconds and not 12 minutes.

Eliminate Multi-Block Reorgs: Multi-block MEV reorgs can be eliminated, since the chain finalizes after 1 block instead of multiple.

Reduced Bugs/Complexity: LMD-GHOST and Casper FFG have to interact with each other to operate, and this interaction has enabled attacks (balancing attacks, withholding and saving attacks). The complexity that arises from this interaction means that problems are 1.) tough to identify and 2.) tough to fix, with new problems still being discovered. The fork choice rule (in this case, LMD-GHOST) determines which chain is the correct one when there are multiple options, for example after a fork, when it has to pick which of the competing chains to follow. With SSF, finality occurs in the same slot the block is proposed, so there is only 1 block for the fork choice rule to consider. As a result, only one of the two mechanisms needs to be in charge at any given time: the finality gadget finalizes blocks when 2/3 of validators are online and attesting honestly, and if a block cannot reach the 2/3 threshold, the fork choice rule kicks in to determine which chain to follow.

Relevant Research/Proposals

I’m going to break down two posts: paths toward SSF & 8192 signatures per slot post-SSF. Let's start with paths toward SSF:

Paths toward SSF from Vitalik is the OG paper on the topic. The paper doesn’t go over a specific design, but instead asks and (tries to) answer a lot of questions surrounding SSF.

Vitalik starts out with three open questions:

What will be the exact consensus algorithm?

What will be the aggregation strategy (for signatures)?

What will be the design for validator economics?

1.) Developing the Exact Consensus Algorithm

Ethereum wants to implement something that mirrors the Gasper style: under extreme conditions the optimistic (not yet finalized) chain can revert, but the finalized chain can never revert. Today, both the fork choice (LMD-GHOST) and the finality gadget (Casper FFG) run side by side; following an SSF implementation, only one of them would need to be in charge at a time. The finality gadget would finalize blocks where 2/3 of validators were online and attesting honestly, and if a block cannot exceed the 2/3 threshold, the fork choice rule would kick in to determine which chain to follow. Consensus algorithms to accommodate SSF are in progress, although nothing formal has been released.

2.) Determining the Optimal Aggregation Strategy

To achieve SSF, each validator has to vote on each slot, and with 500k validators voting every 12 seconds, problems like congestion can and will occur. Each vote comes with a signature, and with 500k validators, that’s 500k signatures every twelve seconds!

Enter: signature aggregation. It is an obvious solution, and lucky for us, the efficiency of BLS signature aggregation has improved dramatically over the past three years (BLS is the current signature scheme used by Ethereum validators). It is important to note that any aggregation strategy needs to factor in the level of node overhead the community is willing to accept.

Although it's a step in the right direction, aggregating BLS signatures will not be enough to support all the validators participating in SSF. As Vitalik notes in his report paths toward single slot finality, Ethereum has to make one of two sacrifices:

Option 1: Increase validators per committee or the committee count: Ethereum can use 1 or both of these strategies to accommodate a higher validator count.

Option 2: Introduce another layer of aggregation: Take all the signatures and aggregate them into an initial group, and then continue recursively aggregating up the stack, for a total of three layers. Each aggregation step builds on the previous layer's aggregated group, further consolidating signatures and improving efficiency.

Option 1: Results in a much higher load on the peer-to-peer (p2p) network, meaning that more data needs to be transmitted and processed across the network, resulting in worse congestion.

Option 2: The trade-off here is mainly around latency. Aggregating signatures at multiple layers requires additional steps, which can increase latency. This option also leads to a higher count of p2p subnets, and more p2p subnets means a larger attack surface. Also, preserving the integrity of the view-merge process becomes more complex, as it needs to protect against potential malicious actors at each level of aggregation.
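The load and the layering can be put into numbers. The sketch assumes 500,000 validators (the figure used above) and a hypothetical fan-in of 100 signatures per aggregator at each layer; `aggregation_layers` is a made-up helper that simply counts how many messages remain after each aggregation step.

```python
import math


def aggregation_layers(num_signatures, fan_in):
    """Message counts per layer when each aggregator merges up to `fan_in` inputs."""
    if fan_in < 2:
        raise ValueError("fan_in must be at least 2")
    layers = [num_signatures]
    while layers[-1] > 1:
        layers.append(math.ceil(layers[-1] / fan_in))
    return layers


VALIDATORS = 500_000
SLOT_SECONDS = 12
signatures_per_second = VALIDATORS / SLOT_SECONDS    # roughly 41,667
layers = aggregation_layers(VALIDATORS, fan_in=100)  # [500000, 5000, 50, 1]
```

Under these assumptions, three aggregation steps take 500,000 signatures down to 5,000, then 50, then a single aggregate. A smaller fan-in adds layers (more latency, more subnets); a larger one pushes more load onto each aggregator, which is the Option 1 versus Option 2 tension in miniature.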

Signature Aggregation Proposals

Work is still ongoing to determine the optimal aggregation strategy.

3.) Determining the Validator Economics

Despite all the potential improvements around signature aggregation, problems can and will arise if more validators want to participate in the validation process than the network can handle. In this scenario, how do we decide who gets to participate and who does not? Answers to this question may require trade-offs that give up properties the staking ecosystem treats as guarantees today.

Some Positives

Adopting single-slot finality removes the need for fixed-size committees and the 32 ETH validator balance cap, which makes it feasible to consolidate the many validator slots owned by wealthy users into a smaller number of slots. Assuming the distribution of stakers follows Zipf's law, removing the balance cap would reduce the number of validator slots to be processed to 65,536. Increasing the cap to 2,048 ETH (rather than removing it) would only add approximately 1,000-2,000 validators, requiring a modest improvement in aggregation technology or increased load to handle single-slot finality effectively. Consolidation also promotes fairness for small stakers, who can stake their entire balance and, with rewards automatically reinvested, benefit from compounding. However, exceptional cases require special considerations, such as:

Non-Zipfian distributions

Wealthy validators opting out of consolidation

A significant increase in staked ETH
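The Zipf-based consolidation argument above can be explored with a toy estimator: give staker k a balance proportional to 1/k (Zipf's law) and count how many validator slots are needed if each staker splits their ETH into validators no larger than the maximum effective balance. The staker count and total stake you plug in are assumptions, the helper names are hypothetical, and this sketch is not meant to reproduce the exact figures from Vitalik's post.

```python
import math


def zipf_balances(total_eth, num_stakers):
    """Staker k (1-indexed) holds ETH proportional to 1/k, summing to total_eth."""
    harmonic = sum(1 / k for k in range(1, num_stakers + 1))
    return [total_eth / (harmonic * k) for k in range(1, num_stakers + 1)]


def validator_slots(balances, max_effective_balance):
    """Slots needed if every staker splits ETH into max-size validators."""
    return sum(max(1, math.ceil(b / max_effective_balance)) for b in balances)


balances = zipf_balances(1_000_000, 1_000)
slots_at_32 = validator_slots(balances, 32)
slots_at_2048 = validator_slots(balances, 2048)
```

Comparing `slots_at_32` with `slots_at_2048` shows how much of the slot count comes from a few large stakers split across many 32 ETH validators; the exceptional cases listed above are exactly the situations where this Zipfian estimate stops being a good guide.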

Vitalik had 2 suggestions regarding these cases:

Suggestion #1: Super Committees

A super committee of a few tens of thousands of validators is randomly selected to participate in each round of consensus instead of all validators, which means each Casper FFG round could happen in a single slot. Every slot, the committee changes, either partially or fully. The committee size is determined by the desired level of security against a 51% attack: the cost of the attack should exceed the potential revenue gained. A few factors go into that cost:

What % of ETH should be burned during recovery events?

What should the cost per second on reorg attacks be?

What should be the one time cost of 51% attacking Ethereum?

How long should an attacker be able to carry out a 51% attack with stolen funds?

Drawbacks to Super Committees

Lowers cost of attack.

Requires developing hundreds of lines of specification code to select and rotate committees.

Wealthy validators distributing their ETH among multiple slots means we lose some of the benefit of raising the 32 ETH cap.

In high MEV environments, committees can delay finality to continue collecting fees.

Suggestion #2(a): Capping the Validator Set

To address excessive validator participation, put a cap on the number of validators itself.

A second approach would use a floating minimum balance: if the validator count exceeds the cap, kick out the lowest-balance validator. However, this enables a griefing attack, where wealthy validators split up their stake to push out small validators and increase their rewards (fewer validators = higher staking yield).
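A minimal sketch of the floating minimum balance rule and the griefing attack it enables. `admit` is a hypothetical helper, and the tiny cap of 2 validators is only for illustration.

```python
def admit(validators, new_id, balance, cap):
    """Add a validator; if the set now exceeds `cap`, evict the lowest balance.

    Returns the evicted validator id, or None if nobody was evicted.
    """
    validators[new_id] = balance
    if len(validators) <= cap:
        return None
    evicted = min(validators, key=lambda v: (validators[v], v))
    del validators[evicted]
    return evicted


validators = {"solo": 32}
# A whale stakes 1,000 ETH as two 500 ETH validators instead of one.
first = admit(validators, "whale-1", 500, cap=2)
second = admit(validators, "whale-2", 500, cap=2)
```

The whale's total stake is unchanged, but splitting it across two slots pushes the solo staker out, leaving fewer validators to share rewards.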

Suggestion #2(b): Capping the ETH Deposited

Another way to address excessive validator participation is to use staking rewards to cap the ETH deposited, with rewards decreasing as the active validator set increases. You might be thinking: isn't that already the case (the more ETH staked, the lower the rewards)? Yes, that's correct. But the reward curve would be modified to decrease faster as the total ETH deposited grows, eventually dropping below 0% at 25 million ETH. Around 33.5 million ETH is the reward curve's limit, preventing the validator set from growing beyond that. However, even with rewards at or below zero, validators may still be incentivized to participate in high-MEV scenarios.

Strengths

Avoids queueing dynamics by ensuring an equilibrium among the validator set.

Drawbacks

Discouragement attacks that drive out other validators (although such attacks are costly).

It's possible that most validators become “marginal” which gives an advantage to large validators over small ones.

8192 Signatures Post SSF

Sticking to 8192 signatures per slot post-SSF: how and why is a newer piece of research from Vitalik regarding approaches to SSF.

TLDR: Post SSF, Ethereum should maintain a maximum of 8192 signatures per slot. This massively simplifies technical implementation. The load of the chain is no longer an unknown number, so clients, stakers, users, etc. all have an easier job, as they can work off of the 8192 assumption. The 8192 number can be raised through hard forks if needed.

In the post, Vitalik talked about three viable approaches that could bring us to SSF. Let’s go over them:

Approach 1: Go All-In on Decentralized Staking Pools

Key Idea: Abandon small-scale solo staking (individuals staking on their own) and focus entirely on decentralized stake pools using Distributed Validator Technology (DVT).

Changes: Increase the minimum deposit size to 4096 ETH, cap the total validators at 4096 (~16.7 million ETH), and encourage small-scale stakers to join DVT pools.

Node Operator Role: This role needs reputation-gating to prevent abuse by attackers.

Capital Provision: Open for permissionless capital provision.

Penalties: Consider capping penalties, e.g., to 1/8 of the total provided stake, to reduce trust in node operators.

Approach 2: Two-Tiered Staking

Key Idea: Introduce two layers of stakers - a "heavy" layer (4096 ETH requirement) participating in finalization and a "light" layer with no minimum requirement but adding a second layer of security.

Finalization: Both layers need to finalize a block, and the light layer requires >= 50% of online validators to attest to it.

Benefits: Enhances censorship and attack resistance by requiring corruption in both layers for an attack to succeed.

Use of ETH: The light layer can include ETH used as collateral inside applications.

Drawback: Creates a divide between small-scale and larger stakers, making staking less egalitarian.
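The two-tier finalization rule can be written as a pair of threshold checks. This sketch assumes the heavy layer uses the usual Casper FFG 2/3 supermajority (the summary above only says both layers must finalize) and that the light layer needs at least 50% of its online participants; the function names and the units of the light-layer counts are hypothetical. Integer arithmetic avoids floating-point edge cases at the thresholds.

```python
def heavy_layer_finalizes(voting_eth, total_heavy_eth):
    """Assumed Casper FFG-style supermajority: at least 2/3 of heavy-layer stake."""
    return 3 * voting_eth >= 2 * total_heavy_eth


def light_layer_confirms(attesting, online):
    """At least 50% of online light-layer participants attest to the block."""
    return online > 0 and 2 * attesting >= online


def is_finalized(heavy_votes, heavy_total, light_attesting, light_online):
    # Final only if BOTH layers agree, so an attack must corrupt both layers.
    return (heavy_layer_finalizes(heavy_votes, heavy_total)
            and light_layer_confirms(light_attesting, light_online))
```

A heavy layer that is fully corrupted still cannot finalize on its own if the light layer refuses to attest, which is where the extra censorship and attack resistance comes from.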

Approach 3: Rotating Participation (Committees but Accountable)

Key Idea: Adopt a super-committee design where 4096 currently active validators are chosen for each slot, adjusting the set during each slot for safety.

Participant Selection: Validators with arbitrarily high balances participate in every slot; those with lower balances have a probability of being in the committee.

Incentives: Balance incentive purposes with consensus purposes, ensuring equal rewards within the committee while weighting validators by ETH for consensus.

ETH Requirement: Breaking finality requires an amount of ETH greater than 1/3 of the total ETH in the committee.

Complexity: Introduces more in-protocol complexity for safely changing committees.
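The participant-selection rule above can be sketched as follows, under stated assumptions: validators at or above a hypothetical `threshold` balance always sit on the committee, and smaller validators are included with probability balance / threshold. This gives an expected committee size rather than exactly 4096 members, and it omits the in-protocol machinery for safely rotating committees and for weighting votes by ETH.

```python
import random


def select_committee(balances, threshold, rng):
    """Hypothetical rule: balance >= threshold always joins; smaller validators
    join with probability balance / threshold."""
    committee = []
    for vid, bal in balances.items():
        if bal >= threshold or rng.random() < bal / threshold:
            committee.append(vid)
    return committee


rng = random.Random(1)
balances = {"whale": 10_000, "empty": 0}
balances.update({f"small-{i}": 1_024 for i in range(10_000)})
committee = select_committee(balances, threshold=2_048, rng=rng)
small_share = sum(v.startswith("small-") for v in committee) / 10_000
```

With validators holding half the threshold, roughly half of them end up on the committee in any given slot, while the large validator participates every time.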

The remaining work is in deciding which of the three approaches we want to go with, or if we want to do something else entirely.

Roadmap to SSF

Single-slot finality is not expected to be live for several more years, as it's still in the research stage. Many of the approaches to SSF are still being researched, and some are still being conceived. Once the community selects the right approach, the heavy work on the upgrade (like specs and testing) can begin.

Single Secret Leader Election (SSLE)

What is SSLE?

In the current Proof of Stake consensus mechanism, the Beacon Chain determines the next 32 block proposers ahead of time, and this list is public. Because a validator's identity can often be linked to its node's IP address, this opens the door for attackers to target upcoming proposers with denial-of-service (DoS) attacks and effectively prevent them from proposing blocks on time.

SSLE could prevent DoS attacks by keeping a block proposer's identity secret until the proposer actually publishes its block.

In an SSLE world, a group of validators could register to participate in a series of elections, with each election choosing only one leader (ergo single in SSLE). Validators in the group submit commitments to a shared secret, which are then shuffled to prevent identification by others (validators retain knowledge of their own commitment). Then, one commitment is randomly selected, and if a validator recognizes it as their own commitment, they know it's their turn to propose a block.
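The shuffle-and-recognize step above can be sketched with the tracker idea used by Whisk, where each validator registers a pair (r·G, r·k·G) for a secret k that it alone knows. A shuffler raises both elements to a fresh random exponent and permutes the list, so nobody else can link outputs to inputs, yet the owner can still recognize its tracker by checking that the second element equals the first raised to k. This is a toy under stated assumptions: it uses multiplicative arithmetic modulo a Mersenne prime rather than an elliptic curve, each validator holds its own secret k, and it omits the zero-knowledge shuffle proofs a real protocol needs.

```python
import random

# Toy group: integers modulo a Mersenne prime. NOT a secure group choice.
P = 2**127 - 1
G = 3


def make_tracker(k, rng):
    """Tracker (g^r, g^(r*k)) that hides the validator secret k."""
    r = rng.randrange(2, P - 1)
    a = pow(G, r, P)
    return (a, pow(a, k, P))


def shuffle_and_rerandomize(trackers, rng):
    """Raise both elements to a fresh exponent s, then permute the list."""
    out = []
    for a, b in trackers:
        s = rng.randrange(2, P - 1)
        out.append((pow(a, s, P), pow(b, s, P)))
    rng.shuffle(out)
    return out


def is_mine(tracker, k):
    """Only the holder of k can check that b == a^k."""
    a, b = tracker
    return pow(a, k, P) == b


rng = random.Random(2024)
secret_of = {v: rng.randrange(2, P - 1) for v in ("alice", "bob", "carol", "dave")}
trackers = shuffle_and_rerandomize(
    [make_tracker(k, rng) for k in secret_of.values()], rng)
elected = trackers[rng.randrange(len(trackers))]
winners = [v for v, k in secret_of.items() if is_mine(elected, k)]
```

Re-randomization preserves the relation b = a^k because (a^s)^k = (a^k)^s, which is why the owner still recognizes its tracker after the shuffle while observers see only fresh-looking pairs.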

SSLE can improve privacy for validators, help them avoid DoS attacks, and also reduce protocol waste (by only electing a single leader).

Motivation

Let’s imagine an attacker who is selected as the block proposer for the next slot (slot n+1). The attacker could launch a denial-of-service (DoS) attack on the proposer in the current slot (slot n), causing them to miss their chance to propose a block. If the DoS is successful, the attacker can then “fill the shoes” of the proposer they attacked, and become the leader of that slot. This gives the attacker control over two slots in a row (slot n and slot n+1), which means they have control over the transactions related to these slots. Having two blocks worth of transactions in their control means that the attacker can decide what to do with two blocks worth of MEV, which isn’t ideal.

A big problem with DoS attacks is that they are much more likely to impact individual home validators than institutional validators. This is because the big boys have more resources and expertise to defend themselves against attacks compared to the little guy. If individual home validators are disproportionately impacted by these attacks, power will centralize to the larger players. Single Secret Leader Election (SSLE) is a possible approach to avoid centralization dynamics playing out at the validator level.

Benefits of SSLE

Protects against denial of service (DoS) attacks

Helps avoid power centralizing to institutional validators

Avoids potential forks, and wastes less effort (this is because SSLE only elects one leader, unlike other SLE implementations)

Relevant Research/Proposals

SSLE: Single Secret Leader Election from Dan Boneh, Saba Eskandarian, Lucjan Hanzlik, and Nicola Greco is the OG paper on SSLE.

Whisk: Whisk is the leading implementation of SSLE. It is a privacy-preserving protocol for electing block proposers on the Ethereum beacon chain.

SnSLE: SnSLE (Secret non-single leader election) is another option. It aims to mimic the randomness of block proposals in PoW systems. One possible way to do this would be to use the RANDAO function, which is already used today to randomly select validators. RANDAO output can be combined with each validator's independently generated hash to produce a random number, which forms the basis of the SnSLE proposal. These numbers can then be used to choose the next block proposer, for example, by picking the lowest value.

Simplified SSLE: sSSLE is a maximally simple version of SSLE, researched by Vitalik.

SLE: Secret Leader Election research from ethereum.org.
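The SnSLE idea described above (lowest hash wins) can be sketched as a simple lottery, assuming each validator hashes the current RANDAO mix together with a private secret of its own so that others cannot precompute its ticket. `lottery_ticket` and `lowest_ticket_wins` are hypothetical names. For illustration the code computes every ticket in one place; in a real design each validator only knows its own ticket until it reveals it, which is why more than one validator may end up proposing (the "non-single" part).

```python
import hashlib


def lottery_ticket(randao_mix: bytes, validator_secret: bytes) -> int:
    """Hash shared randomness with a per-validator secret into a ticket number."""
    return int.from_bytes(hashlib.sha256(randao_mix + validator_secret).digest(), "big")


def lowest_ticket_wins(randao_mix: bytes, secret_by_validator: dict) -> str:
    """Return the validator whose ticket is lowest for this RANDAO mix."""
    return min(secret_by_validator,
               key=lambda v: lottery_ticket(randao_mix, secret_by_validator[v]))


mix = hashlib.sha256(b"example RANDAO mix").digest()
validator_secrets = {f"validator-{i}": hashlib.sha256(bytes([i])).digest()
                     for i in range(8)}
winner = lowest_ticket_wins(mix, validator_secrets)
```

A new RANDAO mix reshuffles every ticket, so the winner is unpredictable to anyone who does not know the secrets.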

Roadmap to SSLE

SSLE is still in the research phase.

Recently, dapplion released a stable devnet that is running a proof of concept (PoC) of Single Secret Leader Election on a fork of Lighthouse & Geth. Read more about it here.

Fork Choice Improvements

The current fork choice rule in Ethereum is LMD-GHOST. LMD-GHOST works in tandem with Casper FFG to achieve consensus; the combination is known as Gasper.

Casper FFG = Casper Friendly Finality Gadget

LMD-GHOST = Latest Message Driven Greediest Heaviest Observed SubTree

Gasper is a hybrid (or a compromise) between chain-based consensus and traditional BFT consensus, the two most popular consensus models in Proof of Stake blockchains. Cardano, Polkadot, and others use longest-chain protocols (chain-based consensus). Tendermint and HotStuff fall under partially synchronous BFT protocols: the Flow blockchain uses HotStuff, and Cosmos uses Tendermint. And then you have Ethereum, which is trying to be both.

Motivation

The LMD-GHOST fork choice rule is a notoriously weak part of Ethereum’s PoS consensus protocol. The interface between Casper FFG and LMD-GHOST is complex and has led to attacks (such as balancing attacks and “withholding and saving” attacks), with more inefficiencies still being uncovered. Getting rid of LMD-GHOST would remove a lot of complexity from the consensus protocol, and would therefore harden the security of the system.

“The ‘interface’ between Casper FFG finalization and LMD GHOST fork choice is a source of significant complexity, leading to a number of attacks that have required fairly complicated patches to fix, with more weaknesses being regularly discovered.”

Benefits of Improving the Fork Choice Rule

Reduce lots of complexity

Could help avoid several types of attacks

Relevant Research/Proposals

RLMD-GHOST: Recent Latest Message Driven GHOST: Balancing Dynamic Availability With Asynchrony Resilience

Goldfish: Goldfish is a drop-in replacement for LMD-GHOST and is much more performant. Put forward by Francesco D’Amato, Joachim Neu, Ertem Nusrat Tas & David Tse, Goldfish is a promising proposal.

Casper CBC: Casper 101 covers Casper Correct-by-Construction from Vlad Zamfir.

View-Merge: View-Merge as a Replacement for Proposer Boost

Single Slot Finality: Reorg Resilience and Security in Post-SSF LMD-GHOST from Francesco.

Fork Choice: eth2book/forkchoice from Ben Edgington covers Ethereum’s fork choice and the history of it in great detail.

View Merge

*This section was removed from the most recent roadmap iteration, but I’m going to leave it here*

View merge is a proposed upgrade that targets the fork choice rule (LMD-GHOST), which is susceptible to two types of attacks:

Balancing attacks

Withholding and Saving attacks

The idea of view-merge is to empower honest validators to express their views on the fork choice, which will help mitigate both kinds of attacks. By empowering honest validators to vote on the head of the chain, you can reduce the chance that malicious validators get to split the vote and reorg the chain.

From the view merge proposal:

“View-merge can almost be viewed as a reorg resilience gadget, able to enhance consensus protocols by aligning honest views during honest slots. In LMD-GHOST without committees, i.e. with the whole validator set voting at once, it is sufficient to have reorg resilience under the assumptions of honest majority and synchronous network. With committees, i.e. in the current protocol, it is not quite enough for reorg resilience because of the possibility of ex ante reorgs with many adversarial blocks in a row and thus many saved attestations. Nonetheless, the view-merge property is arguably as close to reorg resilience as we can get while having committees.”

High Level

Attesters freeze their fork-choice view Δ seconds before the start of a slot.

Proposers propose based on their current view of the chain's head at the beginning of their slot.

Proposers reference all the attestations and blocks used in their fork-choice in a p2p message that accompanies the block.

Attesters include the referenced attestations in their view and make attestations based on the "merged view."
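The four steps above can be sketched in a toy model. The code is hypothetical and heavily simplified: attestations are just (validator, block) votes, the fork choice simply counts votes with a tie-break on block name instead of walking a block tree, and Δ is an arbitrary 4 seconds. It shows the point of the mechanism: two honest attesters whose frozen views differ (because an adversary released one attestation late to one of them) end up agreeing on the head once both merge in what the proposer referenced.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Attestation:
    validator: str
    block: str  # the head block this validator voted for


def freeze_view(arrivals, slot_start, delta):
    """Attester keeps only attestations that arrived by slot_start - delta."""
    return {att for att, t in arrivals.items() if t <= slot_start - delta}


def merge_view(frozen, proposer_refs):
    """Merged view = frozen view plus everything the proposer referenced."""
    return frozen | set(proposer_refs)


def toy_head(view):
    """Toy fork choice: most votes wins, ties broken by block name."""
    votes = Counter(att.block for att in view)
    return max(votes, key=lambda b: (votes[b], b))


SLOT_START, DELTA = 12.0, 4.0
v1, v2, v3, v4 = (Attestation("v1", "A"), Attestation("v2", "B"),
                  Attestation("v3", "A"), Attestation("v4", "B"))
# The adversary times v4's attestation so only attester X sees it before freezing.
x_arrivals = {v1: 1.0, v2: 2.0, v3: 3.0, v4: 7.0}
y_arrivals = {v1: 1.0, v2: 2.0, v3: 3.0, v4: 9.0}
x_frozen = freeze_view(x_arrivals, SLOT_START, DELTA)
y_frozen = freeze_view(y_arrivals, SLOT_START, DELTA)
# The proposer references everything it used in its own fork choice.
proposer_refs = [v1, v2, v3, v4]
x_merged = merge_view(x_frozen, proposer_refs)
y_merged = merge_view(y_frozen, proposer_refs)
```

Before merging, X sees head B and Y sees head A, which is exactly the split a balancing attack relies on; after merging, both attest to B.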

Conditions For The Mechanism To Work

Network delay < Δ ensures the merged view aligns with the proposer's view, preventing balancing attacks.

Fork-choice output depends solely on the view or referenced objects. Network delay < Δ implies agreement on the fork-choice output and honest validators attesting to honest proposals.

Benefits of View Merge

Not abusable by proposers

More powerful reorg resistance

Higher resistance to balancing attacks

Compatible with dynamic-availability

Related Research/Proposals

Quantum-Safe Aggregation-Friendly Signatures

Quantum computing has the potential to break traditional cryptographic algorithms, such as the widely used RSA and elliptic-curve-based algorithms. And although quantum computing is probably a few decades away from being a serious threat, all blockchains rely on cryptography and therefore need to be ahead of the curve when it comes to quantum risks. In the context of Ethereum, one of the risks is the BLS signature scheme, which relies on elliptic curve cryptography and would be broken by a sufficiently powerful quantum computer (via Shor's algorithm).

Quantum-safe aggregation-friendly signatures are being considered to enhance protocol security against quantum attacks.

Quantum safe: Cryptographic operations are protected from future quantum attacks.

Aggregation friendly: A signature scheme that can aggregate large amounts of signatures in an efficient manner.

Related Research/Proposals

BLS-Scheme: Aggregation-friendly but not quantum-safe.

STARK-Based Scheme: Quantum-safe but not very aggregation-friendly.

Lattice-Based Scheme: Quantum-safe but not very aggregation-friendly.

Horn: Signature aggregation scheme.
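Lamport one-time signatures are not one of the candidate schemes listed above; they are simply the smallest hash-based scheme that fits in a few lines. The sketch shows why hash-based designs are considered quantum-safe (security rests only on the hash function) and why they are not aggregation-friendly: each signature is 256 x 32 = 8,192 bytes, versus 96 bytes for an Ethereum BLS signature, and there is no way to merge many of them into one. Each key pair must only ever sign one message.

```python
import hashlib
import secrets


def H(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def keygen():
    """256 pairs of random preimages; the public key is their hashes."""
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(H(a), H(b)) for a, b in sk]
    return sk, pk


def message_bits(msg: bytes):
    digest = int.from_bytes(H(msg), "big")
    return [(digest >> (255 - i)) & 1 for i in range(256)]


def sign(sk, msg: bytes):
    """Reveal one preimage per bit of H(msg). Never sign twice with one key."""
    return [sk[i][bit] for i, bit in enumerate(message_bits(msg))]


def verify(pk, msg: bytes, sig) -> bool:
    bits = message_bits(msg)
    return len(sig) == 256 and all(
        H(s) == pk[i][bit] for i, (s, bit) in enumerate(zip(sig, bits)))


sk, pk = keygen()
sig = sign(sk, b"attestation")
```

Verifying thousands of validators' Lamport-style signatures means checking each one separately, which is the aggregation problem that research like STARK-based and lattice-based schemes is trying to solve.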

Increase the Validator Count

One of Ethereum's long-term goals is to safely increase the validator count (following an implementation of SSF).

Ways to Increase the Validator Set:

Continue trying to keep hardware requirements as low as possible to allow as many people as possible to participate.

Continue riding the coattails of constant improvements to hardware and bandwidth.

The Surge: Massive Scalability Increases For Rollups Via Data Sharding

Goal: 100,000 transactions per second and beyond (on rollups).

Status: I’d say we are about 35% of the way to completing the Surge section. EIP-4844 is complete and shipped on March 13, 2024; however, there is still tons of work to be done on the other items, like danksharding, fraud provers, zk-EVMs, and quantum-safe/setup-free commitments.

What’s Been Implemented?

EIP-4844 Specification is complete!

EIP-4844 Implementation is also complete!

Handful of zk-EVMs: A few zk-EVMs launched in 2023, including zkSync Era (March 24th), Polygon zkEVM (March 27th), Linea (July 18th), and Scroll (October 8th)

Optimistic Rollup Fraud Provers (Whitelisted Only)

What’s Left To Implement?

Danksharding (Full Rollup Scaling)

Optimistic Rollup Fraud Provers

Quantum-Safe and Trusted-Setup-Free Commitments

zk-EVMs

Improve Cross Rollup Standards + Interop

Basic Rollup Scaling: EIP-4844

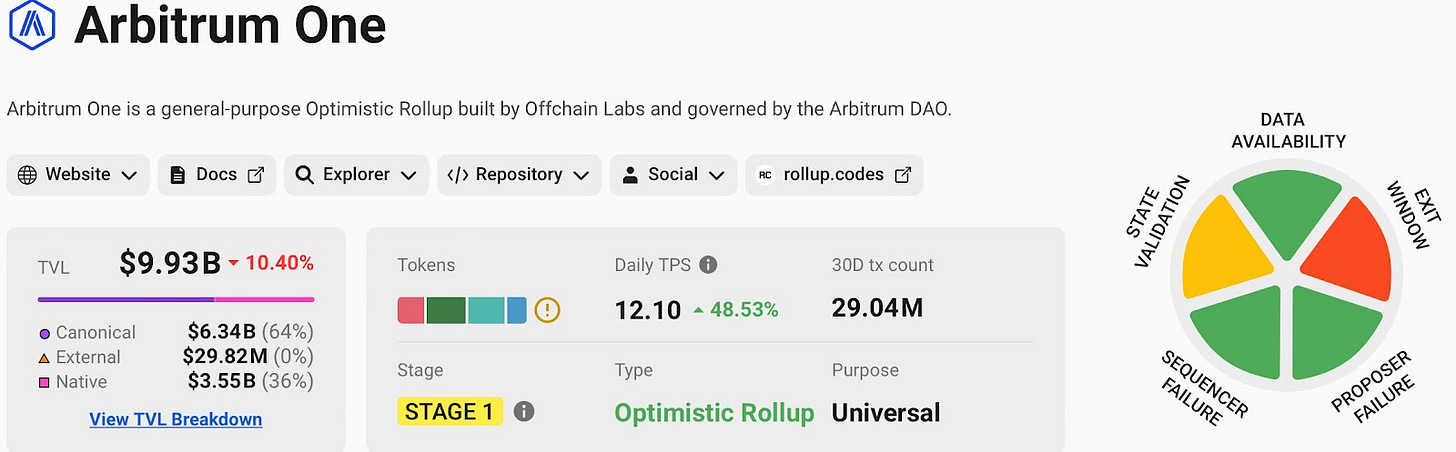

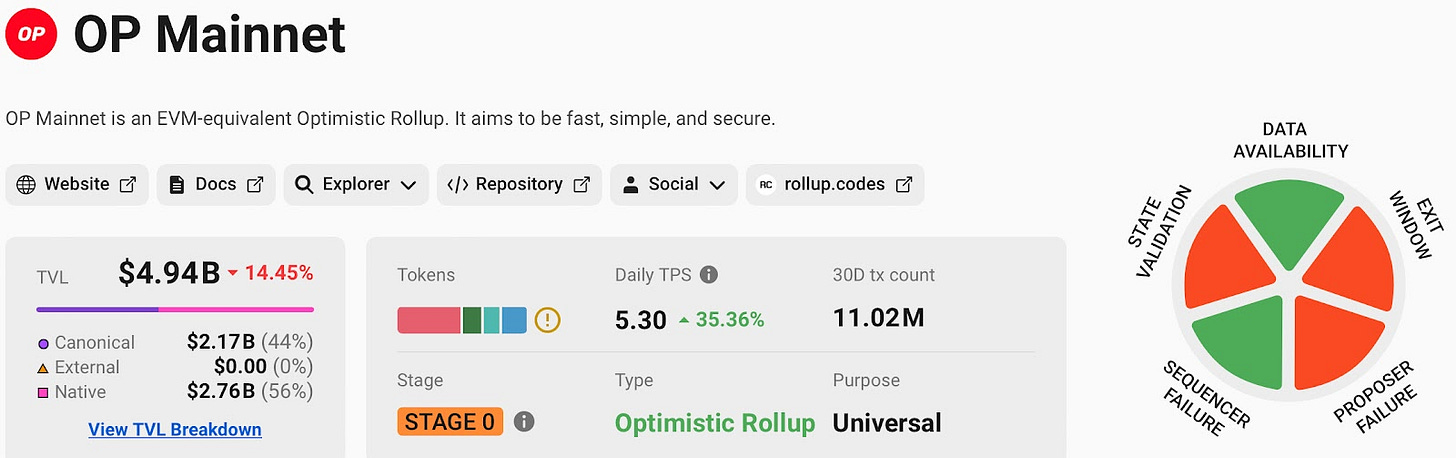

EIP-4844 is an upgrade that changed the way rollups post data to the Ethereum L1. Instead of using calldata, which is very expensive, rollups now use a new data format called blobs.

Motivation

Rollups have been chosen as the preferred scaling method to bring Ethereum to mass adoption, as evidenced by the rollup-centric roadmap. Using a rollup on Ethereum costs significantly less than using mainnet: transfers are currently $0.05 on Optimism vs $0.85 on mainnet, and swaps are $0.11 on Optimism vs $4.26 on mainnet. This is great and shows significant progress from a few years ago, when rollups weren’t even around. But although rollups are much cheaper to use than mainnet, they are still too expensive for many Ethereum users, a problem that will only grow as more and more people are onboarded.

The EIP-4844 upgrade targeted this exact problem: making rollups cheaper. Specifically, the upgrade changed how data is handled by Ethereum and its rollups. Let’s first discuss how data was handled on Ethereum pre EIP-4844, followed by how it is handled post EIP-4844.

Pre EIP-4844

How data was handled pre EIP-4844: rollups received transactions from users offchain and put them into a “batch” of transactions that was then posted to the L1. The data associated with all of the transactions inside a batch was posted as calldata. Rollups (and therefore their users) had to pay for calldata to be posted to Ethereum, and it was not cheap: posting calldata accounted for anywhere between 90% and 99% of rollups' expenses. The rollups couldn’t get around the fact that they had to post calldata to the L1, unless they wanted to start posting their data offchain and become a validium. For rollups to be cheaper to use without sacrificing their security properties, they had to transition from calldata to a cheaper solution.
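For a sense of scale, the calldata cost of a batch can be computed directly. The sketch below assumes the calldata pricing from EIP-2028 (4 gas per zero byte, 16 gas per non-zero byte) and ignores the 21,000 base cost of the transaction and any execution gas; `calldata_gas` is a hypothetical helper.

```python
def calldata_gas(data: bytes) -> int:
    """Calldata cost per EIP-2028: 4 gas per zero byte, 16 per non-zero byte."""
    zero_bytes = data.count(0)
    return 4 * zero_bytes + 16 * (len(data) - zero_bytes)


batch = b"\xff" * 131_072   # 128 KiB of (compressed, mostly non-zero) batch data
batch_gas = calldata_gas(batch)
```

Compressed batches are mostly non-zero bytes, so posting 128 KiB this way costs about 2.1 million gas, competing for the same block space and base fee as every other L1 transaction.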

Enter: Blobs

Post EIP-4844

How data is handled today (post EIP-4844): Blobs were introduced as part of the EIP-4844 upgrade. Blobs come with a separate fee market and a separate gas type (blob gas, or data gas) for rollups to use, effectively replacing calldata with a cheaper solution (calldata is priced around 16 gas/byte, while blob data is priced around 1 blob gas/byte). Blob data is verified by consensus nodes via a *sidecar* implementation. It's important to note that blobs can’t be accessed by EVM execution; only the commitment to the blob can be accessed. This is because blobs only exist in beacon nodes, and not in the execution layer. This setup means that future work on danksharding is contained to the beacon node (the consensus layer) and no work needs to be done on the execution side, which is a big plus.

Blobs let Ethereum rollups transition to a new format for posting data and start fresh, instead of forever trying to optimize and compress calldata to make it cheaper.

*Sidecar*: A sidecar is an auxiliary piece of tech that runs alongside a blockchain's core components to enhance the scalability & efficiency of the chain and its applications. Sidecars can provide things like additional functionality, data storage, or offchain tx processing. A great example of sidecar technology is MEV-Boost.

Pruning

Post-EIP-4844, consensus nodes are required to download all of the blob data (unlike post-danksharding, when nodes will instead download only pieces of the sharded data via DAS). EIP-4844 contributors recognized that making consensus nodes store all of this data indefinitely would overwhelm them, so to keep storage requirements manageable the data blobs are pruned from the nodes after roughly two and a half weeks (4096 epochs, about 18 days). The goal is to keep the data available long enough that any participant in an L2 ecosystem can retrieve it, but short enough that disk use remains practical.
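The "about 18 days" figure follows from the consensus-layer networking spec, which requires nodes to serve blob sidecars for MIN_EPOCHS_FOR_BLOB_SIDECARS_REQUESTS = 4096 epochs. The Python sketch below is a simplified illustration, not client code: it converts that window into days and estimates the disk space it implies, assuming 32 slots per epoch, 12-second slots, a block in every slot, and a constant number of 128 KiB blobs per block.

```python
MIN_EPOCHS_FOR_BLOB_SIDECARS_REQUESTS = 4096  # Deneb networking constant
SLOTS_PER_EPOCH = 32
SECONDS_PER_SLOT = 12
BLOB_SIZE = 4096 * 32  # 131072 bytes per blob


def retention_days(epochs: int = MIN_EPOCHS_FOR_BLOB_SIDECARS_REQUESTS) -> float:
    """Length of the blob retention window in days."""
    return epochs * SLOTS_PER_EPOCH * SECONDS_PER_SLOT / 86_400


def retained_storage_gib(blobs_per_block: int,
                         epochs: int = MIN_EPOCHS_FOR_BLOB_SIDECARS_REQUESTS) -> float:
    """Blob data held by a node over the window, assuming a block every slot."""
    slots = epochs * SLOTS_PER_EPOCH
    return slots * blobs_per_block * BLOB_SIZE / 2**30


def must_still_serve(blob_epoch: int, current_epoch: int) -> bool:
    """Simplified check (ignores the fork epoch): is this blob inside the serving window?"""
    return current_epoch - blob_epoch <= MIN_EPOCHS_FOR_BLOB_SIDECARS_REQUESTS


print(round(retention_days(), 1))  # 18.2
print(retained_storage_gib(3))     # 48.0 (at 3 blobs per block)
```

Doubling the blobs per block doubles the storage, which is why the retention window is a trade-off between retrievability and disk use.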

Why is Pruning Acceptable?

Pruning the data is acceptable because rollups only need data to be available long enough to ensure honest actors can construct the rollup state. Rollups don't need the data forever, so it can be pruned after an agreed-upon window (about 18 days). It's important to note that although the data is pruned from consensus nodes, it is not necessarily lost, as third parties can step in to store it, such as:

Layer 2s

Decentralized Storage Networks (IPFS, Arweave)

Data Availability Committees (DACs)

Voluntary Node Operators

Specialized Data Hosting Services (built specifically for storing pruned blobs)

Academic/Research Institutions

Archive Nodes

Benefits of EIP-4844

Cost Reduction: Blobs are already much cheaper for rollups to use than calldata (EIP-4844 lowered rollup fees by roughly 5-10x).

Good Intermediate Solution: Danksharding is the endgame of Ethereum, but will take a looong time to implement. Rollups are live now, and so waiting a few years for them to get cheaper is not feasible. The EIP-4844 upgrade made rollups cheaper in the interim while we wait for danksharding.

EIP-4844 Makes the Transition to Danksharding Much Easier: Aside from cheaper costs, EIP-4844 makes the eventual transition to danksharding much easier. This is because many of the features that were implemented as part of EIP-4844 will also be needed in the danksharding upgrade. Here are some of the danksharding components that will piggy-back off of EIP-4844:

The data-blob transaction format.

KZG commitments to commit to the blobs.

All of the execution layer logic required for danksharding.

Layer separation between beacon block verification and DAS blobs.

Most of the beacon block logic required for danksharding.

All of the execution/consensus cross verification logic required for DAS.

A self-adjusting, independent gas price for blobs.
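The self-adjusting blob price works much like EIP-1559: an "excess blob gas" counter rises when blocks use more blob gas than the target and drains when they use less, and the blob base fee grows exponentially in that excess. The sketch below is adapted from the reference pseudocode in the EIP-4844 specification, using the Dencun-era constants (target 3 blobs, maximum 6 per block); for brevity it takes plain integers instead of block headers.

```python
MIN_BASE_FEE_PER_BLOB_GAS = 1  # wei
BLOB_BASE_FEE_UPDATE_FRACTION = 3338477
GAS_PER_BLOB = 2**17
TARGET_BLOB_GAS_PER_BLOCK = 3 * GAS_PER_BLOB  # 393216
MAX_BLOB_GAS_PER_BLOCK = 6 * GAS_PER_BLOB     # 786432


def fake_exponential(factor: int, numerator: int, denominator: int) -> int:
    """Integer approximation of factor * e**(numerator / denominator) via a Taylor series."""
    i = 1
    output = 0
    numerator_accum = factor * denominator
    while numerator_accum > 0:
        output += numerator_accum
        numerator_accum = (numerator_accum * numerator) // (denominator * i)
        i += 1
    return output // denominator


def calc_excess_blob_gas(parent_excess: int, parent_blob_gas_used: int) -> int:
    """Usage above target accumulates into the excess; usage below target drains it."""
    if parent_excess + parent_blob_gas_used < TARGET_BLOB_GAS_PER_BLOCK:
        return 0
    return parent_excess + parent_blob_gas_used - TARGET_BLOB_GAS_PER_BLOCK


def base_fee_per_blob_gas(excess_blob_gas: int) -> int:
    return fake_exponential(MIN_BASE_FEE_PER_BLOB_GAS, excess_blob_gas,
                            BLOB_BASE_FEE_UPDATE_FRACTION)


# Ten consecutive full (6-blob) blocks: the excess grows by one target per block, so
# the underlying exponential rises ~12.5% per block; integer rounding keeps the
# wei fee flat at first.
excess = 0
for _ in range(10):
    excess = calc_excess_blob_gas(excess, MAX_BLOB_GAS_PER_BLOCK)
    print(excess, base_fee_per_blob_gas(excess))
```

Because the price depends only on blob usage, a spike in regular transaction demand does not make blobs more expensive, and vice versa.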

The Complexity of the Danksharding Upgrade is Isolated to the Consensus Layer: To get from EIP-4844 to full danksharding, a few things have to be done:

1.) PBS

2.) DAS

3.) Enabling 2D sampling

4.) In-protocol requirement for each validator to verify a piece of sharded data

Notice that all of this work needs to be done on the consensus layer and not the execution layer. This means that following EIP-4844's introduction, execution layer client teams, rollup developers, etc., are ready for danksharding and won't have to implement any more changes or do any more work to prepare for the upgrade. This really simplifies the implementation of danksharding following EIP-4844, and is a very underrated benefit of the upgrade.

Isolating Future Sharding Work to the Beacon Node: Blobs only exist in the beacon node (aka the consensus client) and not the execution layer (meaning blobs exist in Lighthouse and not Geth, for example). This means that blobs can’t actually be accessed by EVM execution, and only the commitment to the blob can be accessed by EVM execution. This setup means that any future sharding work falls on the beacon node and the beacon node alone, allowing the execution layer to work on other upgrades.
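Concretely, what EVM execution sees is a 32-byte "versioned hash" derived from each blob's 48-byte KZG commitment (readable via the BLOBHASH opcode), never the blob bytes themselves. Below is a minimal Python sketch of that derivation, following the `kzg_to_versioned_hash` helper in the EIP-4844 specification; the all-zero commitment in the usage line is placeholder bytes, not a real commitment.

```python
import hashlib

VERSIONED_HASH_VERSION_KZG = b"\x01"  # version byte for KZG-based commitments


def kzg_to_versioned_hash(commitment: bytes) -> bytes:
    """Replace the first byte of sha256(commitment) with a version tag."""
    if len(commitment) != 48:
        raise ValueError("expected a 48-byte compressed BLS12-381 G1 commitment")
    return VERSIONED_HASH_VERSION_KZG + hashlib.sha256(commitment).digest()[1:]


placeholder_commitment = bytes(48)  # stand-in bytes, not a real commitment
print(kzg_to_versioned_hash(placeholder_commitment).hex())
```

The version byte is there so the commitment scheme can later be swapped (for example, for a quantum-safe one) without changing the 32-byte format the execution layer works with.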

KZG Ceremony

The KZG ceremony brought together a large group of participants, each of whom mixed their own secret randomness into a shared set of public parameters (often called the "powers of tau"). Each participant's job was to generate unique data that feeds into these final parameters. We trust these participants to (i) generate secrets, (ii) use the secrets to create & publish their updates to the parameters, and (iii) keep their secrets private and then forget them. Because we trust these participants to destroy their secrets, the KZG ceremony is known as a trusted setup. The trust assumption is mild, though: the result is secure as long as at least one participant honestly destroyed their secret.

Read More about the KZG ceremony here

Motivation

The KZG ceremony is a key part of EIP-4844, as the upgrade requires a commitment scheme for the underlying data blobs. The scheme needs to:

Be fast to prove and verify

Have small commitment sizes

KZG commitments (aka Kate commitments) meet both of these requirements, and because of that they have been chosen as the commitment scheme for data blobs in EIP-4844.

Trusted Setups

To break down trusted setups more, the 'unique data' supplied by a participant is just a secret number, which is combined (multiplied) with the other participants' secret numbers to create a final random number. That final number has to remain unknown to everyone; otherwise, the entire proof system is vulnerable.
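The combining step can be illustrated with a toy "powers of tau" update. Each participant raises every published value to a power derived from their secret s, turning G^(tau^i) into G^((tau*s)^i), so the final tau is the product of all the secrets and is never written down anywhere. This is a deliberately insecure Python sketch with hypothetical helper names: it works in the multiplicative group modulo the prime 2**127 - 1 with an arbitrary base, whereas the real ceremony uses elliptic-curve points on BLS12-381 and checks each contribution with pairings.

```python
import secrets

P = 2**127 - 1  # toy prime modulus (a Mersenne prime); NOT a pairing-friendly curve
G = 3           # toy base element


def initial_srs(n: int) -> list[int]:
    """Start with tau = 1, so every entry G^(tau^i) is just G."""
    return [G] * n


def contribute(srs: list[int], secret: int) -> list[int]:
    """Mix in one participant's secret: G^(tau^i) -> G^((tau*secret)^i) mod P.

    Exponents can be reduced mod P - 1 by Fermat's little theorem.
    """
    return [pow(entry, pow(secret, i, P - 1), P) for i, entry in enumerate(srs)]


srs = initial_srs(4)
for _participant in range(3):
    s = secrets.randbelow(P - 3) + 2  # each participant's secret, in [2, P - 2]
    srs = contribute(srs, s)
    del s  # the "toxic waste": the participant must forget it
print(srs[0] == G)  # True: the tau^0 entry never changes
```

Each participant only ever sees the previous published values, never the accumulated tau, which is why a single honest participant who forgets their secret is enough.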

One of the most famous trusted setups in the crypto industry was done by Zcash in 2016. It's a pretty fascinating story; you can read more here.

Why is a KZG Ceremony Needed?

EIP-4844 needed a commitment scheme for the underlying data blobs, specifically one that is fast to prove/verify with small commitment sizes. KZG commitments fit the criteria and so they were selected. The pieces fit together like this: the KZG ceremony generates the public parameters -> those parameters are used to compute a KZG commitment to each blob -> KZG proofs let anyone check claims about a blob's data against its commitment.

Fun Facts

The name proto-danksharding pays homage to two core contributors: the 'proto' part comes from protolambda (Proto) and the 'dank' part comes from Dankrad Feist (Dankrad).

KZG commitments are named after their creators, Aniket Kate, Gregory M. Zaverucha, and Ian Goldberg, who wrote the paper in 2010 (Original Paper).

The KZG ceremony for EIP-4844 received 141,416 contributions, making it the largest trusted setup in history.

EIP-4844 introduces a new precompile called "point evaluation". Its job is to verify a KZG proof claiming that the blob (represented by its commitment) evaluates to a given value at a given point, which lets contracts check pieces of blob data without ever accessing the blob itself.
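Here is a minimal Python sketch of the precompile's input handling, modeled on the pseudocode in the EIP-4844 specification: a 192-byte input laid out as versioned_hash | z | y | commitment | proof. The actual pairing check is left to a caller-supplied `verify_kzg_proof` function, a hypothetical parameter in this sketch; in practice a KZG library (such as c-kzg-4844) does that work. The stub verifier in the usage lines accepts everything and exists only to exercise the parsing.

```python
import hashlib
from typing import Callable

BLS_MODULUS = 0x73EDA753299D7D483339D80809A1D80553BDA402FFFE5BFEFFFFFFFF00000001
FIELD_ELEMENTS_PER_BLOB = 4096

# Hypothetical signature for the pairing check: (commitment, z, y, proof) -> bool
Verifier = Callable[[bytes, int, int, bytes], bool]


def kzg_to_versioned_hash(commitment: bytes) -> bytes:
    return b"\x01" + hashlib.sha256(commitment).digest()[1:]


def point_evaluation(data: bytes, verify_kzg_proof: Verifier) -> bytes:
    """Check that the committed blob polynomial p satisfies p(z) == y."""
    if len(data) != 192:
        raise ValueError("input must be exactly 192 bytes")
    versioned_hash = data[0:32]
    z = int.from_bytes(data[32:64], "big")  # evaluation point
    y = int.from_bytes(data[64:96], "big")  # claimed value p(z)
    commitment = data[96:144]               # 48-byte KZG commitment
    proof = data[144:192]                   # 48-byte KZG proof
    if kzg_to_versioned_hash(commitment) != versioned_hash:
        raise ValueError("commitment does not match versioned hash")
    if z >= BLS_MODULUS or y >= BLS_MODULUS:
        raise ValueError("z and y must be canonical field elements")
    if not verify_kzg_proof(commitment, z, y, proof):
        raise ValueError("invalid KZG proof")
    # On success the precompile returns two 32-byte big-endian values.
    return FIELD_ELEMENTS_PER_BLOB.to_bytes(32, "big") + BLS_MODULUS.to_bytes(32, "big")


def accept_all(*_args: object) -> bool:
    return True  # stub for illustration only; performs no cryptographic check


commitment = bytes(48)  # placeholder bytes, not a real commitment
payload = (kzg_to_versioned_hash(commitment) + (1).to_bytes(32, "big")
           + (2).to_bytes(32, "big") + commitment + bytes(48))
print(len(point_evaluation(payload, accept_all)))  # 64
```

Checking the versioned hash first ties the proof to a specific blob the EVM already knows about, so a caller cannot substitute a different commitment.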

Read More: EIP-4844

Roadmap to EIP-4844

EIP-4844 is complete! It was rolled out as part of the Dencun (Deneb + Cancun) upgrade on March 13th, 2024. Next up, danksharding!

Full Rollup Scaling: Danksharding

Danksharding is an evolution of EIP-4844 and is seen as part of the endgame for scaling Ethereum.

Motivation

The motivation for the danksharding upgrade stems from the same place as EIP-4844: making rollups cheaper. Much cheaper. Rollups are very data heavy, so they need scaling solutions designed to handle data.

Now, EIP-4844 is great because 1.) it achieves the goal of making rollups cheaper, and 2.) it could be implemented much earlier than danksharding. HOWEVER, EIP-4844 just doesn't provide enough scale for the long term, which brings us to danksharding.

First of all, what is Sharding?

I’m going to briefly go over sharding, Ethereum's original scaling design, colloquially known as ETH 2.0. Read more here.

Sharding means dividing a network into smaller components called shards. Ethereum's original sharding design split validators across 64 shards, with each group responsible for storing data and handling transactions in its respective shard. Distributing the workload this way increases the network's throughput, which is why Ethereum initially planned to adopt the design (although it was eventually scrapped). The conclusion was that the shards' security properties were not strong enough and that there was too much complexity involved in things like cross-shard communication and shuffling validators.

Ultimately, the rollup-centric roadmap emerged and danksharding replaced the original execution sharding design.

Danksharding

Danksharding (aka data sharding) is seen as the final boss of scaling solutions on Ethereum. Instead of deploying execution shards like previous designs (ETH 2.0), danksharding uses data shards. As the name implies, the focus has switched from sharding execution to sharding data. Full nodes & light nodes (the consensus layer) can ensure the data is available by downloading and checking small random samples of it rather than all of it.

EIP-4844 vs Danksharding

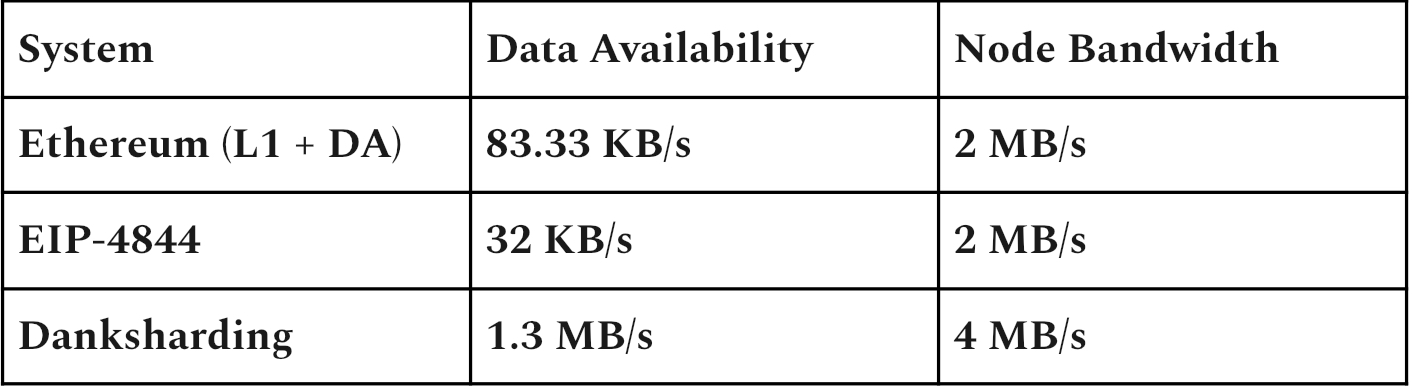

Danksharding is an evolution of EIP-4844 and builds on many of the same foundations, such as the blob. Both danksharding and EIP-4844 use blobs, though danksharding plans to increase the maximum number of blobs allowed in a block, which is a big advantage. More blobs/block = more data/block, which = more txs/block. For reference, EIP-4844 as shipped in Dencun targets 3 blobs per block (with a maximum of 6), with each blob offering 0.125MB of space for data.
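The per-block numbers are simple arithmetic on the blob format. A short Python sketch, assuming 4096 field elements of 32 bytes per blob, one block every 12-second slot, and 3 and 6 blobs per block (the Dencun-era target and maximum). The "usable payload" figure relies on an assumed packing in which only 31 bytes of each element carry data, so every element stays below the field modulus; real rollups may pack more tightly.

```python
FIELD_ELEMENTS_PER_BLOB = 4096
BYTES_PER_FIELD_ELEMENT = 32
BLOB_BYTES = FIELD_ELEMENTS_PER_BLOB * BYTES_PER_FIELD_ELEMENT  # 131072 bytes = 0.125 MiB
USABLE_BYTES_PER_BLOB = FIELD_ELEMENTS_PER_BLOB * 31            # assumed 31-byte packing
SECONDS_PER_SLOT = 12


def blob_space_mib(blobs_per_block: int, usable_only: bool = False) -> float:
    """Blob space per block in MiB (raw, or payload under the assumed packing)."""
    per_blob = USABLE_BYTES_PER_BLOB if usable_only else BLOB_BYTES
    return blobs_per_block * per_blob / 2**20


def throughput_kib_per_s(blobs_per_block: int) -> float:
    """Raw blob bandwidth, assuming one block every 12-second slot."""
    return blobs_per_block * BLOB_BYTES / SECONDS_PER_SLOT / 1024


for label, blobs in (("target", 3), ("max", 6)):
    print(label, blob_space_mib(blobs), blob_space_mib(blobs, usable_only=True),
          throughput_kib_per_s(blobs))
```

At the target this is 0.375 MiB per block, or 32 KiB/s of raw blob bandwidth, which gives a sense of how much headroom danksharding is meant to add.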

Another big advantage of danksharding relative to EIP-4844 is that it will use data availability sampling (DAS). DAS is a mechanism that allows light clients to verify that the data in a block is available by downloading only a portion of it. This is unlike EIP-4844, which requires nodes to download all of the blob data.
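Sampling only a portion can prove availability because the data is erasure-coded first: it is extended so that any half of the extended chunks is enough to rebuild the whole, which is what the "2D sampling" item listed earlier does in two dimensions. The toy below shows the one-dimensional idea as a Reed-Solomon-style polynomial extension over a small prime field, with hypothetical helper names; danksharding's actual design uses a 2D scheme over the BLS12-381 scalar field together with KZG commitments.

```python
P = 2**61 - 1  # toy prime field (a Mersenne prime)


def lagrange_eval(points: list[tuple[int, int]], x: int) -> int:
    """Evaluate at x the unique polynomial of degree < len(points) through `points` (mod P)."""
    total = 0
    for j, (xj, yj) in enumerate(points):
        num, den = 1, 1
        for m, (xm, _) in enumerate(points):
            if m != j:
                num = num * (x - xm) % P
                den = den * (xj - xm) % P
        total = (total + yj * num * pow(den, P - 2, P)) % P  # divide via Fermat inverse
    return total


def extend(chunks: list[int]) -> list[int]:
    """Treat k chunks as p(0..k-1) and append p(k..2k-1) as parity chunks."""
    k = len(chunks)
    points = list(enumerate(chunks))
    return chunks + [lagrange_eval(points, x) for x in range(k, 2 * k)]


def recover(samples: dict[int, int], k: int) -> list[int]:
    """Rebuild the original k chunks from any k surviving (index -> value) samples."""
    if len(samples) < k:
        raise ValueError("need at least k of the 2k extended chunks")
    points = list(samples.items())[:k]
    return [lagrange_eval(points, x) for x in range(k)]


data = [5, 17, 42, 99]
extended = extend(data)
survivors = {i: extended[i] for i in (1, 4, 6, 7)}  # half of the chunks lost
print(recover(survivors, len(data)) == data)  # True
```

The consequence for DAS is that an attacker who wants to make the data unrecoverable must hide more than half of the extended chunks, which random sampling detects quickly.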

DAS is way more efficient than downloading an entire block, and its implementation will lead to many more nodes participating in data sampling, which means more scale. The more nodes there are, the more data can be handled.
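A back-of-the-envelope Python sketch of why a handful of samples suffices. It assumes the data has been erasure-coded so that making it unrecoverable requires withholding at least half of the chunks (in a 2D scheme the threshold is roughly a quarter), and that each sample is an independent, uniformly random chunk. Under those assumptions every sample that comes back at least halves the chance that withheld data went unnoticed.

```python
import math


def undetected_probability(samples: int, withheld_fraction: float = 0.5) -> float:
    """Chance that every random sample lands on an available chunk."""
    return (1 - withheld_fraction) ** samples


def samples_needed(target: float, withheld_fraction: float = 0.5) -> int:
    """Smallest sample count that pushes the miss probability to `target` or below."""
    return math.ceil(math.log(target) / math.log(1 - withheld_fraction))


print(samples_needed(1e-9))        # 30 samples at a 50% withholding threshold
print(samples_needed(1e-9, 0.25))  # more samples if only ~25% must be withheld
print(undetected_probability(30))  # about 9.3e-10
```

The sample count depends only on the target confidence, not on the size of the data, which is why DAS scales: a bigger block does not require each node to sample more.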

Concrete Numbers

When implemented, danksharding will increase the amount of data available per block by around 60x, from around 0.5MB/block (EIP-4844) to around 30MB/block (danksharding). h/t a16z

h/t Sreeram Kannan

Merged Fee Market

Previous sharding designs were much more complex as they had a fixed number of shards that each had distinct blocks and distinct block proposers. Danksharding improves on the design by merging all of this together into one merged fee market. This means that there will only be one proposer who chooses all the transactions & all the data going into a slot, which will massively reduce the complexity of the network.

Benefits of Danksharding (& the Surrounding Upgrades; PBS, DAS)

Lower Fees: Danksharding massively reduces costs for rollups and therefore fees for end users.

Merged Fee Market: Unlike previous designs which had a fixed number of shards that each had distinct blocks and distinct block proposers, danksharding merges the fee market. This means that there will only be one proposer choosing all transactions & all data going into a slot. This massively reduces the complexity of the network.

Redirect Scaling Focus: Finishing the danksharding upgrade would be great not only because 1.) Ethereum would finally have a really solid way to handle data for the long term, but also because 2.) Ethereum could redirect all scaling energy from danksharding to the state side of things and focus on implementing things like state expiry and statelessness (this is really important because, long term, state is the bottleneck, not data).

Synchronous Calls Between ZK-Rollups and L1 Ethereum Execution (potentially): Transactions from a shard blob can immediately confirm and write to the L1 because everything is produced in the same beacon chain block.

Improved Censorship Resistance: Splitting the responsibilities of block proposers and block builders, combined with mechanisms like inclusion lists, makes it much harder for block builders to censor transactions. An inclusion list lets the proposer specify transactions that the builder's block must include, so censorship can be ruled out before the block is proposed.

Proof of DA with Fewer Resources: (Full or light) nodes can be sure that a blob is available and correct by only downloading a small random portion of the blob and not the entire thing.

Reduced MEV: PBS could substantially reduce the risk of frontrunning by proposers. This is because pre-PBS proposers see the highest-paying priority fee txs in the mempool, which they can frontrun. Post-PBS proposers cannot frontrun, as they cannot see the contents of the block; their job is just to accept the highest bid.
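The proposer's role in PBS can be sketched as a commit-reveal auction. In this simplified Python model (all names hypothetical; real designs such as MEV-Boost or enshrined PBS add relays, signatures, and payment guarantees), builders submit only a bid and a hash committing to their block body, the proposer picks the highest bid without ever seeing any contents, and the winning builder must then reveal a body that matches its commitment.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Bid:
    builder: str
    value_wei: int
    body_commitment: bytes  # hash of the block body; the proposer never sees the body


def commit(body: bytes) -> bytes:
    return hashlib.sha256(body).digest()


def select_winning_bid(bids: list[Bid]) -> Bid:
    """The proposer's whole job in this model: take the highest bid, blind to contents."""
    return max(bids, key=lambda bid: bid.value_wei)


def reveal_is_valid(winner: Bid, revealed_body: bytes) -> bool:
    """After the proposer commits, the builder must reveal the body it committed to."""
    return commit(revealed_body) == winner.body_commitment


bodies = {"builder_a": b"txs in order A", "builder_b": b"txs in order B"}
bids = [
    Bid("builder_a", 10**17, commit(bodies["builder_a"])),
    Bid("builder_b", 2 * 10**17, commit(bodies["builder_b"])),
]
winner = select_winning_bid(bids)
print(winner.builder, reveal_is_valid(winner, bodies[winner.builder]))  # builder_b True
```

Because the proposer only ever handles hashes, it has nothing to frontrun; ordering decisions (and any MEV they contain) move to the builders.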